Why and When to Use Surveys

UX surveys, or user experience surveys, are tools that gather information about users' feelings, thoughts and behaviors related to a product or service. A survey is typically a set of questions and can cover a range of topics, depending on the purpose of the research. Designers or researchers analyze survey results to better understand how users interact with a system, application or website, and then work to improve it.

In this video, William Hudson, User Experience Strategist and Founder of Syntagm Ltd, explains the significance of UX surveys.

User experience (UX) surveys play a crucial role in helping design teams understand users’ needs, preferences and behaviors. When UX researchers or designers gather feedback as qualitative and quantitative research data from many participants, they can gain valuable insights. Survey responses can inform user experience design decisions about digital products or services. From there, designers can fine-tune user interface (UI) elements and more to create a better user experience and a high level of user satisfaction, and so help to win loyal customers.

In this video, Alan Dix, Author of the bestselling book Human-Computer Interaction and Director of the Computational Foundry at Swansea University, explains the difference between quantitative and qualitative research:

UX surveys are important for several reasons. First, they provide a cost-effective way to collect valuable insights from users. When companies understand users’ needs and expectations, they can make properly informed decisions. For example, when a brand acts on user feedback and the insights it reveals, it can release new features that users of its existing mobile app are more likely to appreciate, or a better visual design all around.

Second, UX surveys help identify pain points and areas of improvement in the user experience. This lets companies enhance the overall customer satisfaction and loyalty. Last—but not least—when brands conduct UX surveys, they help themselves stay relevant to their customers and drive customer retention. That is a crucial factor for sustainable business growth and exceptional customer service. It’s also vital to help launch a new product when stakeholders have invested so much in it.

Surveys are a particularly useful UX research option. When UX designers or researchers consider research methods for a product design or project, they need to approach and conduct the activities correctly. For example, there are best practices to guide other types of user research, such as user interviews. Similarly, when researchers or designers decide to use surveys, they'll need to consider many factors. These include how to recruit participants and how to ask the right questions (for example, open questions, closed questions, checkbox responses and rating scales). The skill lies in reaching respondents in a way that yields real insights into their behavior and other relevant areas, such as potential improvements for the customer service team.

Feedback from users and customers is a vital gauge of where brands stand in the marketplace.

© Interaction Design Foundation, CC BY-SA 4.0

Here are the main types of UX surveys:

Customer Effort Score (CES) surveys assess how simple it is for customers to complete tasks with a brand. The score indicates whether it was easy or difficult for a customer to use a product or get help from a service team. Ease of experience can be more revealing than overall satisfaction. For instance, after a customer service interaction, a good question could be:

"How easy was resolving your issue with our customer support?"

Very Difficult

Difficult

Moderate

Easy

Very Easy

This format helps brands understand how easy the interaction was from the customer's viewpoint—and it can be an excellent tool to identify areas for improvement.
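One possible way to turn these answers into a single number is to map the five labels to 1 through 5 and average them. The sketch below assumes that mapping and uses a hypothetical function name, `ces_score`; some teams instead report the share of "Easy" and "Very Easy" answers.

```python
from statistics import mean

# Assumed mapping from the answer labels above to a 1-5 scale.
CES_SCALE = {
    "Very Difficult": 1,
    "Difficult": 2,
    "Moderate": 3,
    "Easy": 4,
    "Very Easy": 5,
}


def ces_score(answers):
    """Return the average effort score (1 = very difficult, 5 = very easy)."""
    if not answers:
        raise ValueError("at least one answer is required")
    return mean(CES_SCALE[a] for a in answers)


# Hypothetical answers from five customers.
responses = ["Easy", "Very Easy", "Moderate", "Easy", "Difficult"]
print(ces_score(responses))  # 3.6
```

A higher average means customers found the interaction easier.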

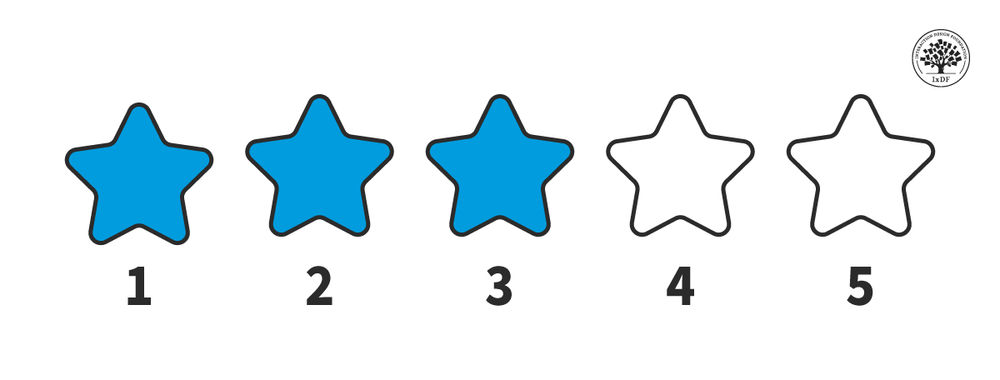

A Customer Satisfaction (CSAT) survey measures how content customers are with a company.

The chief question is, "How satisfied are you with our service?"

Answers can range from 1 ("very dissatisfied") to 5 ("very satisfied").

CSAT surveys focus on individual interactions, such as making a purchase or using customer support, and they use numeric scales to track satisfaction levels over time. Such surveys help brands understand customers’ needs and locate issues with products or services. They also let designers or researchers classify customers by satisfaction level, which helps a service or product team target improvements.
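The text above does not say how to aggregate the 1 to 5 answers. A common convention, assumed here, is to report the percentage of respondents who chose 4 or 5. The function name `csat_percent` and the monthly data are hypothetical.

```python
def csat_percent(ratings, satisfied_threshold=4):
    """Percentage of 1-5 ratings at or above the threshold."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r not in range(1, 6) for r in ratings):
        raise ValueError("ratings must be whole numbers from 1 to 5")
    satisfied = sum(1 for r in ratings if r >= satisfied_threshold)
    return 100 * satisfied / len(ratings)


# Hypothetical ratings grouped by month, to track the trend over time.
monthly = {"Jan": [5, 4, 3, 2, 5], "Feb": [4, 4, 5, 3, 5]}
for month, ratings in monthly.items():
    print(month, csat_percent(ratings))  # Jan 60.0, then Feb 80.0
```

Computing the score per period, as in the loop, is one simple way to see whether satisfaction is rising or falling.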

Net Promoter Score (NPS) surveys are quick and simple. They revolve around one question: “On a scale from 0 to 10, how likely are you to recommend this product/company to a friend or colleague?” Researchers or designers can then use each score to place the respondent in one of three categories:

Promoters (Score 9-10): The biggest fans, they’ll likely recommend the product.

Passives (Score 7-8): These customers are satisfied with the brand’s product or service, but could easily switch to a competitor.

Detractors (Score 0-6): These are unhappy customers who could hurt the brand through negative word-of-mouth.

Researchers or designers can calculate the NPS by subtracting the percentage of Detractors from the percentage of Promoters. The result ranges from -100 to 100 and indicates customer loyalty and areas for improvement.
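As a worked example of that subtraction, the short sketch below classifies a list of 0 to 10 scores using the category boundaries given above. The function name `nps` and the sample scores are illustrative only.

```python
def nps(scores):
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("at least one score is required")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical sample: 4 promoters, 3 passives, 3 detractors.
scores = [10, 9, 9, 8, 7, 7, 6, 4, 10, 3]
print(nps(scores))  # 10.0
```

Here 40% of respondents are promoters and 30% are detractors, so the score is 10. Passives count toward the total but not toward either percentage.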

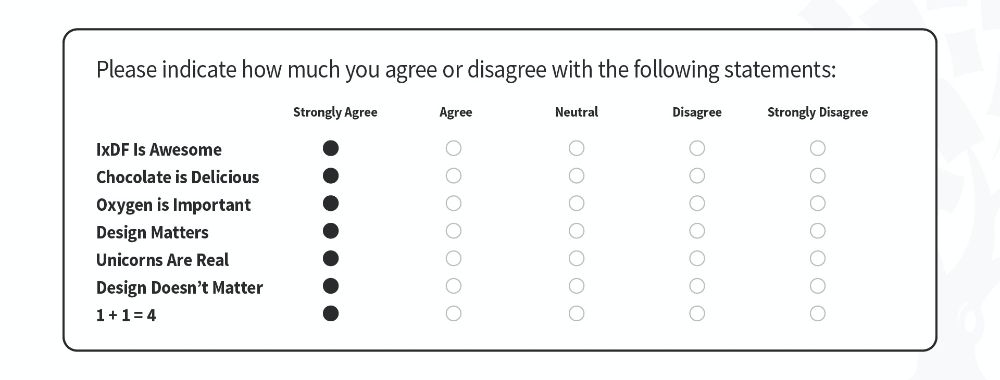

Well-designed, closed-ended questions are easy for respondents to answer. Users pick from predefined options presented as checkboxes, scales or radio buttons, which makes these questions well suited to gathering quantitative data. For example, an exit survey could ask users how their shopping experience was. The answers can yield actionable data, such as customer preferences or common problems.

For example, a question could be: "How satisfied are you with our delivery speed?"

The choices could be:

Very Satisfied

Satisfied

Neutral

Dissatisfied

Very Dissatisfied

Users just select an option that best describes their feelings. This is fast for the user—and easy for the company to analyze.

Closed-ended questions offer a quick-and-easy way to capture respondents’ feedback.

Closed-ended questions may offer fixed choices for quick responses, but open-ended questions permit more detailed, free-form answers. These questions ask users or customers for written responses—going into how they feel and what they expect.

It may take designers or researchers more time to analyze the responses they collect from this type of survey. Even so, they’re valuable as they offer nuanced insights.

For instance, "What feature do you wish we had?" is a good question—and one that can lead to ideas for product enhancements that meet users’ needs.

A design team can conduct UX surveys at various stages of the design process. They can put surveys out before, during or after they develop their product or service as a way to complement user testing and more. The timing of the survey depends on the specific goals and objectives of the research involved in the UX design project.

Online surveys offer quite a number of advantages. For one thing, there’s the convenience of being able to reach a wider audience and collect data in real-time. What’s more, online surveys allow for more customization and flexibility when it comes to the types of questions that a designer or researcher can ask. That’s especially useful when the design team wants to capture detailed feedback on user behavior or motivations.

Surveys do have some drawbacks, though. For one thing, there’s the potential for biased responses that come from survey fatigue or from users misunderstanding questions. What’s more, surveys are limited in how much detailed data they can capture about user behavior or motivation. Last but not least, there’s always a risk that respondents may not answer honestly or accurately due to privacy concerns or other factors. Associated with this is the practice of straightlining, where respondents lose the motivation to reply honestly and click or mark the same place or column to get through a survey as fast as possible.
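A simple screen for straightlining is to flag any respondent who gave the identical answer to every item in a rating grid. The sketch below assumes that rule; the function name `is_straightlined`, the `min_items` cutoff and the sample data are all hypothetical.

```python
def is_straightlined(answers, min_items=5):
    """Flag a response whose rating-grid answers are all identical."""
    return len(answers) >= min_items and len(set(answers)) == 1


# Hypothetical responses to a six-item rating grid (1-5 scale).
responses = {
    "r1": [3, 3, 3, 3, 3, 3],
    "r2": [4, 2, 5, 3, 4, 1],
    "r3": [5, 5, 4, 5, 5, 5],
}
flagged = [rid for rid, answers in responses.items() if is_straightlined(answers)]
print(flagged)  # ['r1']
```

Identical answers can also be genuine, so flagged responses are better treated as candidates for review than removed automatically.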

When a designer—or researcher—conducts a UX survey, it’s a strategic decision to understand various aspects of user interaction with a product or service. And here are some vital scenarios and reasons to implement them:

Many researchers use surveys to validate and confirm if their qualitative findings, such as those from observations, apply to a wider user group. Qualitative methods typically include a small number of participants, and it is not advisable to base product or business decisions on their insights alone. Quantitative methods such as surveys give teams the confidence in their findings to make impactful decisions. This approach is called triangulation and, in particular, methodological triangulation, as William Hudson explains in this video.

Designers or researchers may find UX surveys better suited to assessing existing products than products still in development. These surveys can gather insights on how well the target audience receives a feature or service. Feedback from such surveys can guide adjustments or additions to a product or service.

For example, if customers think an existing feature lacks some functionality, a designer can focus on working to improve it. UX surveys offer valuable data to refine a product—and so better align it with customer needs and expectations.

It’s vital to find and remove pain points in order to create a user-friendly experience. UX surveys provide direct feedback from users about issues that bother them. These could include matters the design team is unaware of that make the customer experience less enjoyable or efficient.

For example, users might point out that they find the checkout process too complicated. These insights provide specific areas to focus design improvement efforts upon, and so help to fix problems and prove to users that their feedback is valuable.

A well-timed UX survey can reveal how well a brand meets customer expectations after a critical interaction like a purchase or customer service call.

Long-term success pivots on customer loyalty. NPS surveys, which are a kind of UX survey, help gauge this. When design teams identify promoters, passives and detractors, they can help tailor customer retention and referral strategies. If they see a drop in loyalty scores, then that’s a spur to dig deeper into potential issues.

Journey mapping visually represents a user's interactions with a product or service and tracks the entire experience, from the first touchpoint to the final interaction. A well-designed UX survey can yield insights at multiple stages of this journey.

For example, CES surveys are really useful at various checkpoints to measure ease of use. CSAT surveys can check satisfaction at critical touchpoints like purchase or support.

Open-ended questions can offer qualitative insights into why users make specific choices. These answers fill gaps in the journey map that analytics data might lack.

In this video, Matt Snyder, Head of Product and Design at Hivewire, explains journey mapping.

If a brand is planning to rebrand, make a major update or carry out some other significant change, then a UX survey is an essential tool. It helps get a good idea of customer sentiment and expectations beforehand.

When a design team collects survey data, they can better plan adjustments that are in line with customers’ needs. That way, they can lessen the risk of negative backlash.

The need for improvement is never-ending, and regular UX surveys create a feedback loop to help brands track user sentiment and performance metrics. They permit ongoing adjustments on the basis of real-world usage, keep the product aligned with users’ needs and expectations, and so help sustain successful marketplace performance.

Product Discovery Coach, Teresa Torres explains continuous improvement in this video:

When designers or researchers conduct a UX survey, it calls for careful planning and execution for them to get actionable insights. Here are six best practices:

People’s time is valuable, and long surveys can put off participants. A quick and concise survey keeps a participant engaged—and it’s important to focus on the essential questions and take any unneeded ones out.

Keep the survey to 5-10 essential questions.

Use clear and concise language.

Preview the survey with a friend or colleague to get feedback on the length.

It’s crucial to make sure that survey questions are relevant—to collect valuable data. If questions stray off-topic, they might confuse—or even annoy—participants. So, keep questions well focused and that will help keep the insights goal-oriented.

Define the target audience and goals first; then write questions.

Don’t use generic questions that don't relate to the product or service.

Focus on specific user experiences that are in line with the objectives.

Give users “not applicable/don’t know” answer options for all closed questions.

Bias is natural for humans, but it can greatly distort results and lead to misguided conclusions. Framing questions objectively helps to collect unbiased replies. Some common biases include:

Question order bias: This affects responses according to the sequence of questions.

Confirmation bias: When the survey asks questions that affirm what the asker already believes.

Primacy bias: People choose the first options they see.

Recency bias: People choose the last options they see.

Hindsight bias: Respondents claim that they could foresee events.

Assumption bias: When the asker assumes respondents know certain information.

Clustering bias: People believe there are patterns where none exist.

Designers or researchers can remedy these if they:

Avoid leading questions.

Use neutral language.

Consider asking an expert to review questions for potential bias.

Test the survey on a small group before they launch it.

Multiple-choice questions and rating scales may be excellent ways to collect numerical data, but open-ended questions can bring in really rich, qualitative insights. Because the blend can make for a more comprehensive view of customer sentiment, it’s vital to:

Use a mixture of question types according to the information that’s needed.

Leverage open-ended questions for in-depth insights and multiple-choice for quick feedback.

Consider scale questions to measure user satisfaction or preferences.

An accessible survey helps bring in a wide range of perspectives. If users or customers, including those with disabilities, can access a survey, they'll provide a much more complete and diverse set of insights. That improves the quality of the data and the resulting decision-making. So, it’s essential to:

Apply easy-to-read fonts and adequate color contrast.

Provide alternative text to go with images.

Make sure that users can navigate the survey using keyboard controls.

Test the accessibility features of the survey.

Avoid complex layouts and matrix-style questions.

Watch this video to understand how essential accessibility is in design:

It’s crucial to prioritize participants' privacy to build trust. When people feel confident that their data is safe, they'll be far more ready to engage fully in a survey. A strong privacy policy meets legal standards, boosts participation rates and enriches the quality of the insights. So, it’s important to:

State the privacy policy at the beginning of the survey.

Use secure platforms to conduct the survey.

Assure participants their responses will stay confidential.

Put sensitive or personal questions near the end.

Designers or researchers should follow these steps to design, distribute and analyze surveys for actionable insights.

To define what they aim to discover, designers—or researchers—should consider:

The main goal: Is it to measure user satisfaction or to focus on something else?

Relevant user behaviors: Will the survey target frequent users, new users—or both?

Key metrics: Is it important to examine completion rates, time spent or other indicators?

New feature opinions: Is input needed on newly rolled-out features?

Pain points: Are user frustrations and roadblocks important things to identify?

When designers or researchers are clear about the objectives, it will guide every next step and ensure they align the results with project goals. Well-defined goals will streamline the survey's structure—and they’ll help craft relevant questions. The sharper focus will also help in the analysis of the data that comes later on.

It’s vital to identify the target audience in terms of:

Product awareness: Gauge how much the audience actually knows about the product or service. This shapes the depth and detail of questions.

Interests: Understand what topics engage the audience. That insight’s helpful to make questions interesting.

Language: A professional audience may understand industry jargon—while a general one may not. So, it’s a vital point to pick words carefully.

Region: Geography can affect preferences and opinions—so it may be necessary to localize questions.

A solid understanding of the target audience helps designers or researchers to write good questions—questions that users or customers can relate to well. It’s something that leads to higher engagement and more accurate data in user research. Designers can make customer or user personas and a user journey around them, too.

Questions really are the heart of a survey. So, it’s vital to write engaging, clear and unbiased ones to get the insights needed. Therefore, what’s crucial is to:

Use different types, like multiple-choice for quick feedback or open-ended for deeper insights.

Use simple language, don’t use jargon and make sure that every question serves a clear purpose.

Be mindful of potential biases and keep the questions neutral.

Keep the users interested and guide them onwards through the survey.

It’s a critical point to pick the right tool for the UX survey—for good data collection and analysis. A Google Form provides a quicker way to get started with UX surveys. Here’s why:

Ease of use: Google Forms are user-friendly—and it’s easy to create a survey quickly and without much technical knowledge.

Customization: It offers various themes and allows question branching based on prior answers.

Integration: Google Forms integrates with other Google services like Google Sheets for real-time data tracking.

Free: For basic features, it's free of charge.

Data analysis: Offers basic analytics like pie charts and bar graphs for really helpful, quick insights.

It’s possible to use specialized UX research tools like SurveyMonkey with more advanced features, too. It’s important to consider what the objectives are and what the target audience needs. Then, a designer or researcher should choose a tool that serves those needs best.

Pilot testing is an invaluable step to refine the UX survey. It gives a chance to uncover unforeseen issues with the survey design, questions or technology.

A researcher or designer should recruit a small number of participants to test the survey. Ask internal team members for help if needed, or contact professionals via LinkedIn. Use this test run to understand their experience and make any necessary adjustments. This can make the difference between a good survey and a great one. Plus, it helps iron out issues and ensures a smoother survey experience for the primary audience.

Launching the survey means more than making it live. It calls for picking the proper channels, timing and even incentives. When a designer or researcher promotes the survey, they make sure that it reaches the intended audience and encourages these people to get involved.

It’s essential to consider the time of day, the day of the week and even the platform that aligns with the audience. So, plan every aspect of the launch to maximize participation.

Data analysis transforms raw data into valuable insights—and it’s vital to make sense of things. So, it’s crucial to use analytical tools to sort, filter and interpret the data in the context of the objectives. Look for patterns and correlations but also for unexpected discoveries.

The designer’s or researcher’s interpretation should lead to actionable insights that guide product or service improvement. This step transforms the effort of surveying into real value for the project at hand.

Last—but not least—it’s time to share the findings and implement changes. This completes the process. So, it’s really important to create comprehensive reports and engage stakeholders with the insights. Sharing is something that nurtures a shared understanding—and it sets the stage for informed decisions.

The design team should plan and iterate on improvements based on the insights they get—and use the learnings for continuous enhancement.

Each step is a building block—one that contributes to a successful and insightful user experience survey. And when designers or researchers follow this roadmap, it helps make sure that what they create is an engaging, relevant and actionable UX research survey.

Overall, UX surveys are superb opportunities to capture essential feedback that can shed light on the right areas to improve—or develop well—in a product or service. The key is to choose, structure, fine-tune and apply them well—and digest the feedback mindfully.

Take our course Data-Driven Design: Quantitative UX Research.

Find out more about triangulation and how you can use it with surveys to create effective personas in our course, Personas and User Research: Design Products and Services People Need and Want.

Consult our Ensuring Quality piece for additional insights.

Read Mastering UX surveys: Best practices and how to avoid biases by Andrés Ochoa for more details.

See How to use UX surveys to gain product experience insights by Hotjar for further information.

Read User Experience(UX) Survey Questions: 60+ Examples (+Template) by Pragadeesh for more helpful insights and examples.

For more on straightlining, read Survey Straightlining: What is it? How can it hurt you? And how to protect against it by Dave Vannette.

Include questions that uncover user needs, preferences and frustrations. Use closed-ended questions for quantitative data and open-ended questions for qualitative insights. Ask users to rate their satisfaction with specific features and to say how often they use them. Include demographic questions so you can understand the user base better. Avoid leading or ambiguous questions so that responses aren’t biased.

William Hudson explains how to write good questions in this video:

Aim for a survey that participants can complete in 5 to 10 minutes. This typically translates to 10 to 15 questions. Keeping surveys short and focused increases the completion rate and improves the quality of responses. Use clear and concise questions so you cover essential topics and don’t overwhelm participants. Prioritize questions that yield the most valuable insights for your design decisions.

Designers need to avoid these common mistakes in UX survey design—mistakes that would happen if they:

Ask leading questions that bias the respondent's answers.

Use jargon or technical language—that respondents mightn’t understand.

Include too many open-ended questions—something that can overwhelm participants and reduce completion rates.

Make the survey too long—leading to participant fatigue and drop-offs.

Neglect to pilot the survey to catch issues before wider distribution happens.

Forget to ensure anonymity—which can influence the honesty of responses.

Overlook how important a clear and concise introduction is—one that explains the survey's purpose and estimated completion time.

To effectively distribute a UX survey, follow these steps:

Identify your target audience based on your UX research’s goals.

Pick the right channels for distribution—like email, social media or your product's website—where your target audience is most active.

Provide incentives—like a chance to win a gift card or access to premium content—to bump up participation rates.

Use clear and compelling communication to explain the purpose of the survey and how participants' feedback is going to actually be used.

Make sure the survey is mobile-friendly—that's because many users will likely access it on their smartphones.

Follow up with reminders—but don’t spam participants—to encourage those who haven’t yet completed the survey.

To increase UX survey participation rates, consider these incentives:

Gift cards or vouchers for popular retailers.

Entry into a prize draw for a larger prize—like electronics or a subscription service.

Exclusive access to premium content or features within your product or service.

Discounts or coupons for your product or service.

A summary of the survey findings—offering valuable insights to participants.

A donation to a charity for each completed survey—and so appeal to participants’ altruism.

Clearly communicate the incentive in the survey invitation to motivate participants to get involved. What’s more, make sure the chosen incentive is in line with your target audience's interests.

To analyze UX survey results—and do it well—try following these steps:

Clean the data: Take out incomplete responses and outliers that can skew results.

Do quantitative analysis: For closed-ended questions, use statistical tools to calculate averages, percentages and patterns—it'll help in understanding general trends and user preferences.

Do qualitative analysis: Analyze open-ended responses by categorizing them into themes or topics. That’s going to uncover deeper insights into user motivations, frustrations and experiences.

Identify key findings: Look for commonalities and major differences in the data. And focus on insights that relate to your research objectives directly.

Cross-reference responses: Compare responses across different demographic groups or user segments—to spot specific needs or issues.

Draw conclusions: From your analysis, summarize the main insights that are going to inform your UX design decisions. And think about both the quantitative data and qualitative feedback—to get a well-rounded understanding of your users.

Recommend actions: Suggest actionable steps to address the findings. These are things that could include design changes, new features or areas for further research.
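The first few steps above can be sketched with the Python standard library alone. Everything in this example is an assumption made for illustration: the field names (`segment`, `satisfaction`, `comment`), the sample rows and the keyword list used to tag themes. Keyword matching is a crude stand-in for reading and coding comments by hand.

```python
from collections import Counter, defaultdict
from statistics import mean

# Hypothetical survey export: one dict per respondent.
responses = [
    {"segment": "new", "satisfaction": 4, "comment": "Checkout was confusing"},
    {"segment": "new", "satisfaction": 2, "comment": "Slow pages and slow checkout"},
    {"segment": "returning", "satisfaction": 5, "comment": "Fast delivery"},
    {"segment": "returning", "satisfaction": None, "comment": ""},
    {"segment": "returning", "satisfaction": 4, "comment": "Search is slow"},
]

# Assumed theme keywords; a real study would derive themes from the data.
THEMES = {"checkout": ["checkout"], "speed": ["slow", "fast"]}


def clean(rows):
    """Drop rows that lack a satisfaction rating."""
    return [r for r in rows if r["satisfaction"] is not None]


def mean_by_segment(rows):
    """Average satisfaction for each user segment."""
    groups = defaultdict(list)
    for r in rows:
        groups[r["segment"]].append(r["satisfaction"])
    return {seg: mean(vals) for seg, vals in groups.items()}


def theme_counts(rows):
    """Count how many comments mention each theme at least once."""
    counts = Counter()
    for r in rows:
        text = r["comment"].lower()
        for theme, keywords in THEMES.items():
            if any(k in text for k in keywords):
                counts[theme] += 1
    return counts


valid = clean(responses)
print(mean_by_segment(valid))
print(theme_counts(valid))
```

In this made-up sample, new users rate their satisfaction lower than returning users and mention checkout more often, which is the kind of cross-referenced finding that can point to a concrete action.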

Remember—the goal of analyzing UX survey results isn’t just to collect data; it’s to gain actionable insights that can improve the user experience, too.

Get an idea of what actionable insights look like in our piece Rating Scales in UX Research: The Ultimate Guide.

To protect participants’ privacy in UX surveys, stick to these practices:

Inform participants: Explain the purpose of the survey—clearly—and how you’ll use the responses. Include a privacy statement that outlines the measures you’re taking to protect their information.

Anonymize responses: Collect responses anonymously whenever you can. If you must link responses to individuals for follow-up, store the identifying data separately from the survey responses to protect anonymity.

Use secure platforms: Choose survey platforms that comply with data protection regulations—such as GDPR or CCPA—and these platforms should offer encryption and secure data storage.

Limit personal data: Only collect personal information that’s absolutely necessary for your research objectives. And certainly don’t ask for sensitive information that could put participants at risk.

Data minimization: Regularly review the data you’ve collected—and delete anything that’s no longer necessary for your research goals.

Educate your team: Make sure everyone who’s involved in the survey process understands the importance of privacy—and knows how to handle participant data responsibly.

Offer opt-outs: Let participants skip questions they’re uncomfortable answering—and provide an option to withdraw from the survey at any point.
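The idea of storing identifying data separately from answers can be sketched as follows. The field names, the `split_identity` helper and the sample rows are hypothetical. Strictly speaking this is pseudonymization rather than full anonymity, because whoever holds the contact table can still re-link a response.

```python
import secrets


def split_identity(raw_rows):
    """Separate contact details from answers, linked only by a random key."""
    contacts, answers = {}, []
    for row in raw_rows:
        key = secrets.token_hex(8)  # random, not derived from the email
        contacts[key] = row["email"]
        answers.append({"key": key, "rating": row["rating"], "comment": row["comment"]})
    return contacts, answers


# Hypothetical raw submissions that include an email for follow-up.
raw = [
    {"email": "a@example.com", "rating": 4, "comment": "Easy to use"},
    {"email": "b@example.com", "rating": 2, "comment": "Hard to find settings"},
]
contacts, answers = split_identity(raw)
# `contacts` would go to restricted storage; analysts see only `answers`.
print(answers)
```

Deleting the contact table once follow-up is complete also fits the data minimization practice listed above.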

Timing has a great impact on the quality of user feedback. Send the survey too early, and the feedback may not fully reflect the user's experience, because users may not have had enough time to explore and evaluate your product thoroughly. Waiting too long to distribute the survey, though, can lead to diminished recall and less precise feedback, because the details of the experience might no longer be fresh in respondents' minds.

Ideally, the survey should follow a meaningful interaction with the product, like completing a purchase or reaching a milestone within an app. This makes sure that the feedback is relevant and based on concrete experiences. What’s more, sending surveys after updates or big changes can provide insights into how these alterations affect user satisfaction and behavior.

As another point—consider the user's journey and pick moments when they’re likely to be more receptive and have the time to give thoughtful responses. Avoid times of peak activity or potential stress—it’ll ensure higher response rates and more accurate feedback.

Strategically timed surveys can yield richer, more actionable insights—and help designers and product managers to make informed decisions that really do enhance the user experience.

UX researchers handle survey response biases through several strategies—namely, if they:

Design clear questions: They craft questions that are straightforward and unbiased, avoiding leading words that might influence the responses.

Randomize question order: This keeps the order of questions from affecting the answers—especially in surveys with multiple choices.

Pilot test: Researchers conduct pilot tests with a small audience so they can spot—and correct—any biases before they distribute the survey widely.

Use a mix of question types: Including both open-ended and closed-ended questions helps balance the quantitative data with qualitative insights. This lowers the risk of skewing the results towards one type of response.

Anonymous responses: Guaranteeing anonymity encourages honesty, especially in questions that might lead to socially desirable answers.

Apply statistical techniques: After researchers collect responses, they use statistical methods to find—and adjust for—any biases that might’ve influenced the results.

Diversify sampling: They make sure that the survey reaches a diverse audience—to keep biases that are associated with a particular demographic group from dominating the results.
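Randomizing question order, one of the strategies above, can be sketched with Python's standard `random` module. The helper name `randomized_order`, the use of a respondent ID as the seed and the question labels are all assumptions for illustration. Seeding makes each respondent's order reproducible, so it can be reconstructed later during analysis.

```python
import random


def randomized_order(questions, respondent_seed):
    """Return a per-respondent shuffled copy; the original list is unchanged."""
    rng = random.Random(respondent_seed)  # seeded so the order is reproducible
    shuffled = list(questions)
    rng.shuffle(shuffled)
    return shuffled


# Hypothetical question labels and respondent IDs.
questions = ["Ease of navigation", "Page speed", "Checkout", "Support"]
for respondent_id in (101, 102, 103):
    print(respondent_id, randomized_order(questions, respondent_id))
```

The same approach can be applied to the answer options within a question to counter primacy and recency effects, although ordered scales such as "Very Satisfied" to "Very Dissatisfied" are usually left in sequence.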

Santosa, P. I. (2016). Measuring User Experience During a Web-based Survey: A Case of Back-to-Back Online Surveys. Procedia Computer Science, 72, 590-597.

This publication has been influential in the field of user experience (UX) research, particularly in the context of web-based surveys. The study explores the measurement of user experience during a web-based survey, using a case of back-to-back online surveys. The author investigates the impact of survey design and user interface on the user experience, providing insights into how to create more engaging and user-friendly survey experiences. The findings of this study can help researchers and practitioners in the field of UX design to improve the overall quality and effectiveness of web-based surveys, leading to better data collection and more reliable insights.

Tullis, T., & Albert, B. (2013). Measuring The User Experience: Collecting, analyzing, and presenting usability metrics. Newnes.

This book is a comprehensive guide to conducting user experience (UX) surveys and analyzing the resulting data. It covers various UX metrics, such as task success rate, time on task and satisfaction, and provides best practices to design, administer and interpret UX surveys. The book is widely cited in the UX research community as a go-to resource for understanding and applying UX measurement techniques.

These questions form a comprehensive framework for understanding various aspects of the user experience. Remember to use only a few of these to keep response rates high.

“How did you find our website/app?”

“What was your primary goal in visiting our site today? Did you achieve it?”

“How easy was it to navigate our site?”

“What features did you use most?”

“Were there any features that were unclear or that could be easier to use?”

“How would you rate your overall experience?”

“What would you change about our website or app?”

“How likely are you to recommend our product to a friend or colleague?”

“What other products or services would you like us to offer?”

“Did you encounter any technical issues?”

“What is your preferred payment/delivery method?”

“What is your preferred method of contact for support?”

“How would you describe our product in one sentence?”

“How does our product compare to similar ones in the market?”

“Were our support resources (FAQs, live chat) helpful?”

“How could our product better meet your needs in the future?”

“How did you find the speed of the site?”

“What language options would you prefer for our website/app?”

“Would you like a follow-up from our team regarding your feedback?”

“Would you be interested in future updates or newsletters?”

Here’s a list of the eight best user experience survey templates that are free to use:

Find out what clients think about your business. Use this form as a case study to collect thoughts on customer service and more. Make changes to the template to focus on specific aspects of customer interaction.

Experts have made this ready-to-use template to improve your software's Net Promoter Score (NPS). Get critical insights to elevate your product.

Easily gauge customer loyalty with this template. Customers rate their likelihood of recommending you from 0 to 10. Adapt the template to explore additional areas.

Assess the performance of your customer service team. Adapt the survey to delve into aspects that you’re particularly interested in.

Send this brief survey to understand customer perceptions. It encourages customers to elaborate on their answers. Make adjustments to fit your needs.

Use this template to collect comments on your products. It aims to identify issues and suggest resolutions.

Collect rapid feedback on your products. Use this form to get concise and actionable comments from customers.

Capture detailed information on how your customers feel about your products and services. It’s useful for pinpointing specific areas for improvement.
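Several of the templates above collect a 0 to 10 "likelihood to recommend" rating, which is the input for a Net Promoter Score. As a rough sketch of how those ratings are usually turned into a score, the example below uses the standard NPS convention: respondents who answer 9 or 10 count as promoters, those who answer 0 to 6 count as detractors, and the score is the percentage of promoters minus the percentage of detractors. The function name and the sample ratings are hypothetical.

```python
def net_promoter_score(ratings):
    """Compute NPS from a list of 0-10 'likelihood to recommend' ratings."""
    if not ratings:
        raise ValueError("at least one rating is required")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")

    promoters = sum(1 for r in ratings if r >= 9)   # answered 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # answered 0 to 6
    # Ratings of 7 or 8 are passives: they count in the total only.
    return 100 * (promoters - detractors) / len(ratings)


if __name__ == "__main__":
    sample = [10, 9, 8, 7, 6, 3, 10, 9, 5, 10]  # made-up responses
    print(net_promoter_score(sample))
```

The result ranges from -100 (every respondent is a detractor) to 100 (every respondent is a promoter), so it is best read alongside the open-ended comments that explain why people chose their rating.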



Take a deep dive into Surveys with our course Data-Driven Design: Quantitative Research for UX.
