Human-robot interaction (HRI) is the field that studies and designs how people and robots communicate, collaborate, and live together. It involves creating robots that can detect, interpret, and model human actions, signals, and intentions well enough to interact effectively; respond in ways consistent with their programmed goals and the user’s context, within the limits of their sensing and decision-making; and operate safely in human environments. As a UX (user experience) designer working in HRI, you shape how machines behave around people: how they signal intention, interpret human behavior, and build trust, so that robots enhance human activities without friction or danger.

In this video, William Hudson, User Experience Strategist and Founder of Syntagm Ltd., explains how the Fourth Industrial Revolution creates a deeply connected technological world that sets the stage for advanced human-robot interaction.

A robot is a programmable machine that’s capable of carrying out physical tasks or actions automatically, often with some degree of autonomy. Robots can sense their environment, process information, and respond through movement or behavior, either pre-programmed or adaptive. They may look mechanical, humanoid, or entirely utilitarian, but their core function is to perform work, which can be physical, cognitive, or interactive, without needing constant human control. To qualify as a robot, a system must include both a computational (digital) component and a physical or embodied component that acts in the real world. One notable example is Boston Dynamics’ Spot, a four-legged robot used for inspections, construction-site work, public-safety applications, and more. Another is a household name: the Roomba robot vacuum cleaner.

Robots have thrived in the popular imagination ever since Czech writer Karel Čapek introduced the term in his 1920 science fiction play R.U.R. The word comes from the Czech “robota,” which means forced labor or drudgery. In 1954, George Devol filed a patent for a “Programmed Article Transfer” machine, which became “Unimate,” the first programmable industrial robot. Designed for industrial tasks, Unimate could move parts and tools. Early robots soon emerged in the automotive, electronics, and aerospace industries to automate repetitive or dangerous tasks, increase precision and efficiency, and reduce labor costs in manufacturing.

By the 2020s, advances in robotics had raised expectations further. Modern robots exist to support humans: not just to replace labor, but to enhance safety, accessibility, efficiency, and quality of life. Their purpose is increasingly shaped by ethical, human-centered design goals as much as by technological capability. As a designer of robots, you’re responsible for ensuring that they help rather than harm, and when you design robots that serve users and improve their lives, you realize the vision of what robotics is all about. In an era of the Internet of Things and calm computing, computer-controlled devices in people’s homes and on their persons have multiplied to the point where technology really is all around many users. That makes this a fitting time for considerate and effective HRI UX design to improve lives.

In this video, Alan Dix, Author of the bestselling book “Human-Computer Interaction” and Director of the Computational Foundry at Swansea University, helps you recognize how everyday devices contain numerous embedded computers, giving you insight into the complex, technology-rich contexts that robots must navigate safely and supportively.

To create effective and humane human-robot interaction, you’ll need a structured, user-centered, and safety-aware process. Here are key steps and design practices.

Start by defining who’ll interact with the robot, under what conditions, and for what purpose. Ask yourself:

Who are the users, and what are their abilities, needs, comfort levels, and expectations?

Where will the interaction happen: at home, in a workplace, in a hospital, or in a public space?

What tasks or functions should the robot fulfill: assistance, collaboration, caregiving, entertainment, mobility, or monitoring?

When you understand the users’ context and goals, you’ll be better able to choose appropriate interaction styles. For instance, a robot in a hospital must prioritize safety and clarity; a companion robot for elders must respect emotional comfort and social cues. HRI is inherently interdisciplinary, including robotics, design, psychology, and ethics: all of these matter and need to come together if you’re going to create robots that truly help people.

In this video, Alan Dix demonstrates how attending to users’ bodies, surrounding actors, environmental conditions, and past events helps you design interactions that truly fit people’s needs, reinforcing why HRI must look beyond the robot’s interface to the full human context.

Robots can communicate using many channels beyond traditional screens, so use modalities that match human expectations and their environment:

Speech or natural language for intuitive commands or conversation.

Gesture, posture, or movement detection: humans signal intent with body language; robots can sometimes recognize and interpret these cues using vision and sensor systems, although accuracy depends on the environment, training data, and task.

Sensors, vision, proximity, force/tactile feedback to detect human presence, motion, or physical interaction.

Haptic or tactile feedback to give users a physical sense of the robot’s intent or actions.

By combining multiple channels (multimodal interaction), you increase flexibility and make interaction more natural and accessible between user and robot.

In this video, Alan Dix shows you how different forms of haptic and tactile feedback can shape interaction, highlighting when touch-based modalities succeed or fail.

People need to understand what the robot intends to do and when and why it will do it; otherwise, interaction will feel unpredictable or unsafe. That’s a perfectly natural concern. People are “hard-wired” to suddenly become afraid in the face of uncertainty, and while a robot may not be a bear or tiger, a human mind in self-preservation mode can quickly conjure fears of a merciless machine advancing and not listening to their pleas for reason. To address that, design the robot to:

Signal its status and intentions clearly, such as with lights, sounds, or movement cues, before acting.

Behave in predictable ways: avoid sudden, surprising motions.

Provide consistent feedback when actions succeed or fail; let users know what’s happening.
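The three behaviors above (signal before acting, act predictably, report success or failure) can be sketched in code. This is a minimal illustration, not a real robot API: `SignalingRobot`, `Status`, and the `log` list are hypothetical names, and the log stands in for whatever lights, sounds, or movement cues a real robot would use.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    IDLE = "idle"
    ANNOUNCING = "announcing"
    ACTING = "acting"
    SUCCEEDED = "succeeded"
    FAILED = "failed"


@dataclass
class SignalingRobot:
    """Hypothetical wrapper that always announces an action before doing it."""

    log: list = field(default_factory=list)
    status: Status = Status.IDLE

    def _signal(self, status: Status, message: str) -> None:
        # Stand-in for lights, sounds, or movement cues (assumption).
        self.status = status
        self.log.append((status.value, message))

    def perform(self, action_name: str, action) -> bool:
        # 1. Announce the intention before anything moves.
        self._signal(Status.ANNOUNCING, f"About to {action_name}")
        # 2. Show that the action is in progress.
        self._signal(Status.ACTING, f"In progress: {action_name}")
        try:
            action()
        except Exception as error:
            # 3a. Tell the user clearly when something fails.
            self._signal(Status.FAILED, f"Could not {action_name}: {error}")
            return False
        # 3b. Confirm success so the user knows the action is over.
        self._signal(Status.SUCCEEDED, f"Finished: {action_name}")
        return True


if __name__ == "__main__":
    robot = SignalingRobot()
    robot.perform("turn left", lambda: None)
    for status, message in robot.log:
        print(f"[{status}] {message}")
```

The point of the design is that callers cannot trigger an action without the announcement step, so the cue-before-motion order stays consistent across every behavior.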

Design guidelines for HRI emphasize “understandability” and “predictability” as being foundational to safe, effective interaction. Another design concern you’ll need to consider alongside that is how the users’ culture can influence how they might take to a robot’s design and behavior.

In this video, Alan Dix shows you how cultural differences shape how people interpret interface cues, reminding you that clear and predictable behavior depends on designs that make sense across cultures.

Copyright holder: Tommi Vainikainen _ Appearance time: 2:56 - 3:03 Copyright license and terms: Public domain, via Wikimedia Commons

Copyright holder: Maik Meid _ Appearance time: 2:56 - 3:03 Copyright license and terms: CC BY 2.0, via Wikimedia Commons _ Link: https://commons.wikimedia.org/wiki/File:Norge_93.jpg

Copyright holder: Paju _ Appearance time: 2:56 - 3:03 Copyright license and terms: CC BY-SA 3.0, via Wikimedia Commons _ Link: https://commons.wikimedia.org/wiki/File:Kaivokselan_kaivokset_kyltti.jpg

Copyright holder: Tiia Monto _ Appearance time: 2:56 - 3:03 Copyright license and terms: CC BY-SA 3.0, via Wikimedia Commons _ Link: https://commons.wikimedia.org/wiki/File:Turku_-_harbour_sign.jpg

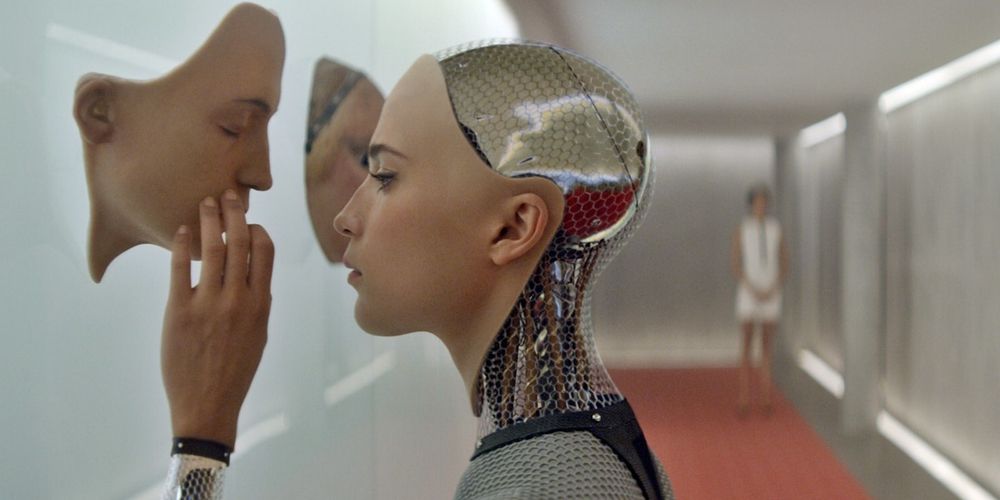

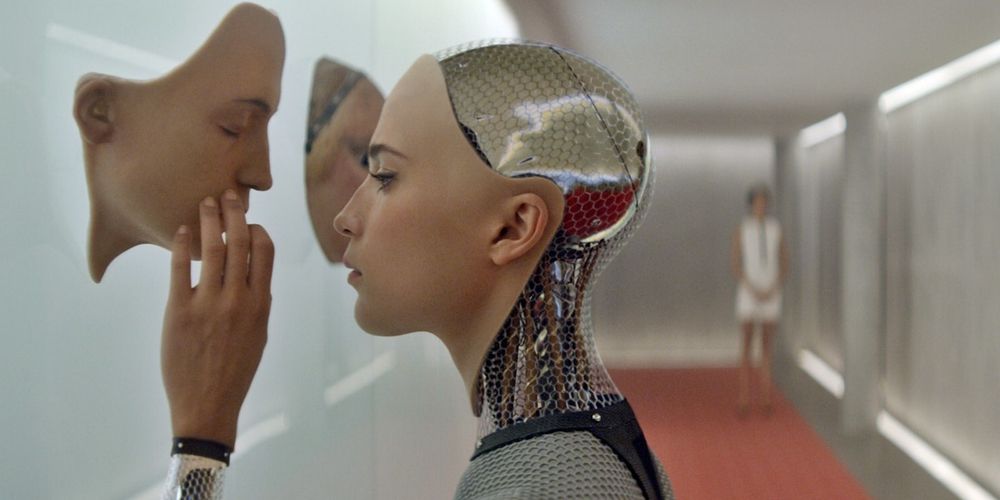

Robots often operate with some level of autonomy, but giving robots full control may make humans feel disempowered or unsafe. This concern isn’t limited to HRI designers: it’s stamped into the popular imagination through science fiction books and movies set in dystopian futures. So, instead, aim for shared control or collaborative autonomy:

Define clear roles: When the human leads, when the robot leads, and how control shifts.

Let humans override robot decisions easily or intervene when needed.

Adapt automation level to context, so there’s high autonomy when it’s safe and beneficial, and human-in-the-loop whenever uncertainty or risk exists.

This approach, sometimes called “adaptive collaborative control,” treats human and robot as partners, not master and tool. It calls for you to keep a firm grasp of empathy for users as you build foundations on which robots interacting with humans can stand, succeed, and help rather than fall, fail, and hurt.
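As a rough sketch of how control might shift between human and robot, the function below picks a control mode from estimated risk and uncertainty, and always honors a human override. The names (`ControlMode`, `choose_control_mode`) and the thresholds `low` and `high` are illustrative assumptions; real values would come from safety analysis and user testing.

```python
from enum import Enum


class ControlMode(Enum):
    ROBOT_LEADS = "robot_leads"        # high autonomy
    HUMAN_CONFIRMS = "human_confirms"  # robot proposes, human approves
    HUMAN_LEADS = "human_leads"        # human in direct control


def choose_control_mode(
    risk: float,
    uncertainty: float,
    human_override: bool = False,
    low: float = 0.3,   # assumed threshold, for illustration only
    high: float = 0.7,  # assumed threshold, for illustration only
) -> ControlMode:
    """Pick who leads, given risk and uncertainty estimates in [0, 1]."""
    if not (0.0 <= risk <= 1.0 and 0.0 <= uncertainty <= 1.0):
        raise ValueError("risk and uncertainty must be between 0 and 1")

    # A human override always wins, whatever the robot estimates.
    if human_override:
        return ControlMode.HUMAN_LEADS

    # Be conservative: react to the worse of the two estimates.
    concern = max(risk, uncertainty)
    if concern >= high:
        return ControlMode.HUMAN_LEADS
    if concern >= low:
        return ControlMode.HUMAN_CONFIRMS
    return ControlMode.ROBOT_LEADS


if __name__ == "__main__":
    print(choose_control_mode(risk=0.1, uncertainty=0.2))
    print(choose_control_mode(risk=0.1, uncertainty=0.5))
    print(choose_control_mode(risk=0.9, uncertainty=0.1))
```

Taking the maximum of risk and uncertainty is one conservative choice among several; a weighted combination would also be defensible, as long as the override path stays unconditional.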

Discover helpful insights about the essential nature of designer-user empathy as this video shows you how empathetic design helps systems support user goals clearly and calmly: a principle that also guides how humans and robots should share control.

Robots should adapt to human behavior, preferences, and their environment over time, which involves:

Using sensors and machine learning to detect patterns, user habits, and environmental changes.

Allowing the robot to learn and refine behavior: for example, adjusting assistance based on user comfort or past interactions.

Permitting personalization, where users should be able to set preferences, boundaries, or levels of assistance.

A human-centered evaluation, which combines qualitative measures (user comfort, acceptance) with quantitative ones (task performance, safety), helps you iterate on the design for better alignment with human needs.

Explore the possibilities as this video explains how machine learning enables systems to learn from data and improve over time: a foundation for robots that adapt to users and environments.

Since human-robot interaction involves unpredictable real-world factors, with people, spaces, noise, movement, and social norms, you’ll have to test prototypes outside the lab, in realistic settings. So:

Use observational studies with real users to discover pain points, misunderstandings, or safety risks.

Evaluate usability, comfort, trust, safety, and acceptability over time; long-term use reveals issues lab tests might miss.

Involve interdisciplinary feedback, with designers, engineers, psychologists, and end users, to refine both the robot’s behavior and the interaction model.

Remember, at the core of this process is the idea that you’ll ensure robots fit real human life, not just ideal scenarios. That’s one reason designing with personas, research-based representations of real users, is essential.

In this video, William Hudson shows you why persona stories, grounded in real user research, lead to designs that fit actual human needs rather than idealized assumptions.

Designing good HRI unlocks major benefits and transforms robots from mere tools into cooperative, helpful agents that enhance human life and capabilities. More specifically, the benefits of human-robot interaction design done well include:

With thoughtful and effective HRI design, robots can assist with tasks that are repetitive, dangerous, physically demanding, or require high precision. For example, in industries, robots and humans can work side by side and share tasks safely in collaborative settings. And in healthcare, elder care, rehabilitation, or caregiving, robots can support mobility, daily tasks, or social interaction and enhance the quality of life and independence for many.

Robots designed with strong human-centered HRI principles are more likely to feel intuitive and comfortable to users, reducing (but not eliminating) feelings of awkwardness or alienness. With speech, gesture, or natural feedback, people can engage without steep learning curves. That ease of use fosters acceptance, trust, and more widespread adoption.

This natural interaction lowers barriers for diverse user groups, including people unfamiliar with robotics, elderly users, and individuals with limited mobility. Accessible design and HRI design meet in robots that help users with disabilities, and that let any user give requests and commands in a variety of ways.

Pick up powerful points to design with, as this video explains how accessibility principles ensure that interactions are usable and intuitive for people with diverse abilities.

HRI allows a shift away from rigid, pre-programmed robots toward dynamic partners that adjust to human rhythm, habits, and context. That collaborative autonomy, where the robot adapts to user behavior and environment, opens possibilities for more fluid, efficient human-robot teams across many domains: manufacturing, home, services, and public spaces.

Beyond pure functionality, human-robot interaction can address social and emotional needs. Robots that can recognize certain social and emotional signals (like facial expressions, tone of voice, or proximity) and respond with socially appropriate behaviors in well-defined contexts can serve in roles such as companionship, therapy, or customer service. They can help bridge accessibility gaps for people with disabilities, or support mental-health and social needs. Many people may feel emotional or psychological benefits from helpful robots once trust is established, though reactions will vary widely by person, culture, and context.

Overall, human-robot interaction encompasses a fascinating and increasingly relevant set of design challenges. It compels you to think beyond screens, buttons, or classical “interfaces.” It asks you to imagine robots not as tools but as partners: embodied, adaptive, empathetic agents that understand humans, act safely, and integrate naturally into daily life. And it promises to become even more exciting and opportunity-filled as some long-imagined robotic capabilities become practical, while many others remain speculative or long-term research goals.

The core challenges of safety, autonomy, and trust in human-robot interaction are as much human as they are technical. When you design HRI thoughtfully, with respect for human context, safety, dignity, emotion, and trust, you’ll help build a future where robots extend our capabilities, support our well-being, and collaborate with us in meaningful, human-centered ways. Excellence in HRI demands humility, care, and responsibility, along with a commitment to design for transparency, control, ethics, and real human lives.

Above all, in a world that humans and robots share, humans need the primary focus. That’s why HRI UX design will always require designers to look beyond the “nuts and bolts” and keep a clear view of why people, with all their quirks and “irregularities,” choose to share a world with robots, which must cater to those human traits with all the insight and care designers can program into them. A robot’s duty of care to the humans it serves becomes your duty of care to get the design right, long before the robot steps out into the world and does things your brand will be accountable for.

Delve deep into an exciting world of Artificial Intelligence and its related subjects with our course AI for Designers.

Enjoy our Master Class Human-Centered Design for AI with Niwal Sheikh, Product Design Lead, Netflix for a wealth of helpful insights.

Discover how to design more seamless experiences human users can enjoy, with our article No-UI: How to Build Transparent Interaction.

Explore a treasure trove of helpful insights to help your design career, in our article How Can Designers Adapt to New Technologies? The Future of Technology in Design.

HRI, or human-robot interaction, focuses on how people interact with robots, machines that act in the physical world, while HCI studies interactions with digital systems like apps or websites. Robots have bodies, autonomy, and the ability to sense and act in real space, which introduces new design challenges like movement, spatial awareness, and social cues.

HCI primarily involves screen-based interfaces, but HRI must account for embodiment, timing, and trust in shared environments. For example, a robot vacuum’s path planning must feel safe and predictable, unlike a static UI (user interface) where motion isn’t a factor. As a designer in HRI, you’ll also need to consider emotions, expectations, and the human-like behavior that people often project onto robots, even if the robot isn’t truly intelligent.

Harvest some essential insights about Human-Computer Interaction (HCI) to improve your design understanding.

HRI is crucial as robots increasingly enter homes, hospitals, factories, and public spaces. Good UX design in this domain ensures robots feel trustworthy, understandable, and helpful, not intimidating or confusing. As machines become more autonomous, poor design can cause miscommunication, accidents, or emotional discomfort.

As a designer, you must shape how users understand robot intent, respond to its actions, and feel about ongoing interaction. For instance, a healthcare robot assisting elderly patients must balance authority with empathy, requiring thoughtful design of voice, gestures, and pace. When you understand HRI well, you’ll be in a position where you can lead innovation in service robotics, assistive tech, and collaborative automation, making interactions more humane, ethical, and inclusive, especially in high-stakes, real-world environments.

Speaking of hospitals, harvest some helpful insights about a vital dimension of user experience design, from our article Healthcare UX—Design that Saves Lives.

The main goals of HRI design include safety, clarity, trust, efficiency, and emotional comfort. Robots should act in ways humans can easily predict, interpret, and respond to.

So, you should aim to foster smooth, natural interaction, especially when robots share physical spaces with humans. Clear communication of intent is critical: for example, a delivery robot should signal when it’s turning or stopping. Emotional goals matter, too: robots often evoke human-like expectations, so their behaviors should match social norms without overpromising intelligence. Whether it’s through touch, voice, gestures, or spatial navigation, ensure users feel confident, respected, and safe during every interaction.

Access important insights to design with a better understanding of Artificial Intelligence (AI) with our article AI Challenges and How You Can Overcome Them: How to Design for Trust.

Core HRI design principles include predictability, legibility, transparency, feedback, and safety.

Predictability ensures users understand what the robot will do next, while legibility helps users interpret the robot’s current behavior, through motion, posture, or signals. Transparency builds trust by revealing decision-making or status in human-friendly ways. Robots must offer clear feedback so users know when commands are received or tasks are complete. Safety covers both physical safety and psychological comfort; robots should avoid abrupt motions, maintain appropriate distance, and respond calmly.

These principles must work together to support collaboration, reduce ambiguity, and meet user expectations. A well-designed HRI experience feels intuitive, respectful, and aligned with how people naturally communicate and act.

Explore how heuristics, including those about feedback, can guide you to design better experiences.

Begin by studying human social behavior: movement, timing, gaze, and personal space. Use motion that’s smooth, purposeful, and paced to human expectations. Robots should avoid sudden gestures and make eye contact where appropriate.

Consider proxemics: people feel comfortable when robots respect physical boundaries. Also, mimic familiar social cues, like nodding or pausing before speaking, to make robots seem more relatable.

Behavior must match function: don’t make simple robots act overly expressive if they lack real intelligence. Consistency matters, too. Users trust robots more when their behavior is stable and easy to interpret. Another vital point is to test in real environments, as it’ll reveal what feels natural or unsettling, and help refine motion, posture, and response timing for realism and empathy.
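To make the ideas of personal space and smooth, unhurried motion concrete, here is a small sketch that slows a robot as it nears a person and limits how abruptly its speed can change. The function names and every number (stop distance, slow-down distance, speed step) are assumptions chosen for illustration; they are not taken from proxemics research or from any safety standard.

```python
def approach_speed(
    distance_m: float,
    max_speed: float = 1.0,        # assumed top speed in m/s
    stop_distance_m: float = 0.5,  # assumed personal-space boundary
    slow_distance_m: float = 2.0,  # assumed distance where slowing begins
) -> float:
    """Return a target speed that falls to zero as the robot nears a person."""
    if distance_m < 0:
        raise ValueError("distance_m must not be negative")
    if distance_m <= stop_distance_m:
        return 0.0  # never enter the person's personal space
    if distance_m >= slow_distance_m:
        return max_speed
    # Scale speed linearly between the two boundaries.
    fraction = (distance_m - stop_distance_m) / (slow_distance_m - stop_distance_m)
    return max_speed * fraction


def limit_speed_change(current: float, target: float, max_step: float = 0.1) -> float:
    """Move toward the target speed in small steps to avoid sudden motion."""
    delta = target - current
    delta = max(-max_step, min(max_step, delta))
    return current + delta


if __name__ == "__main__":
    speed = 1.0
    for distance in (3.0, 2.0, 1.5, 1.0, 0.6, 0.4):
        target = approach_speed(distance)
        speed = limit_speed_change(speed, target)
        print(f"distance={distance:.1f} m  target={target:.2f}  speed={speed:.2f}")
```

Because comfortable distances vary by person, culture, and setting, parameters like these are best treated as starting points to tune through testing with real users.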

Discover important points about a vital force connecting users with your product and brand, in our article Trust: Building the Bridge to Our Users.

You can adapt UX research methods like user observation, contextual inquiry, and usability testing for HRI. Observe how people interact with robots in real environments to understand expectations, emotional responses, and pain points.

Use think-aloud protocols to capture user reasoning. Run Wizard of Oz studies, where a human secretly controls the robot, to prototype interactions before full autonomy gets developed.

Surveys and interviews reveal user trust, perceived intelligence, or discomfort. Video analysis helps review nuanced behaviors. Consider longitudinal studies, too, since users’ trust and habits evolve over time. Combining qualitative and quantitative data gives insight into behavior, safety perception, and emotional engagement, critical for designing robots users accept and enjoy.

Find a firm foundation in user research to help boost your success in designing for human-robot interactions.

People interact with robots through a wide range of interfaces: voice commands, touchscreens, physical buttons, mobile apps, and increasingly through gestures or gaze. Multimodal interfaces, where multiple input types are used, are common and improve accessibility and flexibility. For instance, a user might start a task with voice and confirm it on a screen.

Robots may also use visual signals (lights, displays), sound cues (beeps, speech), or motion (body orientation, arm gestures) to communicate status or intent. The choice depends on context: industrial robots may rely on buttons and displays, while home assistants often use voice and lights. Good HRI design ensures interfaces match user expectations and task requirements and minimize confusion or overload.

Pick up some helpful design insights from our article How to manage the users’ expectations when designing smart products.

Yes, HRI offers powerful ways to enhance accessibility. Robots can assist with tasks like mobility, object retrieval, communication, or monitoring, especially for people with physical or cognitive disabilities.

Voice control and gesture-based input help users with limited mobility. Social robots can support therapy or provide companionship. For example, robots like PARO help reduce anxiety in dementia care, while robotic arms aid people with quadriplegia.

HRI allows for adaptive interfaces, too, where the robot tailors interaction style to user preferences or limitations. However, it’s essential to prioritize inclusive design, testing with real users, offering multimodal inputs, and ensuring robustness to different abilities. Done right, HRI can increase independence, dignity, and quality of life, and beautifully bring life-improving experiences home to many individuals.

Venture into Voice User Interfaces (VUIs) for a wealth of helpful insights to design with.

Combine inputs like voice, touch, and gesture based on context and user needs. Each mode should support the others: voice for commands, touch for control, gestures for spatial or emotional cues. Prioritize clarity: users must know when a robot is listening or responding.

Use visual or audio feedback to confirm actions. Avoid conflicting signals: synchronize modes to prevent overload. Design fallback options in case one mode fails. Consider user preferences, accessibility, and cultural norms, as gestures can vary widely. And test combinations in real settings to assess intuitiveness. When well-integrated, multimodal HRI allows for more natural, efficient, and inclusive interactions, especially in noisy, hands-busy, or multi-user environments.
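The guidance above (let modes support each other, avoid conflicting signals, and design fallbacks) might look like this in a simplified form. `ModalityReading`, `resolve_command`, the confidence threshold, and the priority order are all hypothetical; a real system would get its readings from speech, touch, and vision components.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class ModalityReading:
    modality: str            # e.g. "voice", "touch", "gesture"
    command: Optional[str]   # None when the mode failed or heard nothing
    confidence: float        # recognizer confidence in [0, 1]


def resolve_command(
    readings: Sequence[ModalityReading],
    min_confidence: float = 0.6,  # assumed threshold, for illustration
    priority: Tuple[str, ...] = ("voice", "touch", "gesture"),
) -> Tuple[Optional[str], str]:
    """Return (command, feedback) from several input modes."""
    usable = [
        r for r in readings
        if r.command is not None and r.confidence >= min_confidence
    ]
    # Fallback: no mode produced a usable reading, so ask again.
    if not usable:
        return None, "ask_user_to_repeat"

    # Conflicting signals: do not guess, ask the user to confirm.
    if len({r.command for r in usable}) > 1:
        return None, "ask_user_to_confirm"

    # Modes agree (or only one worked): report which one was used.
    rank = {name: index for index, name in enumerate(priority)}
    best = min(usable, key=lambda r: rank.get(r.modality, len(priority)))
    return best.command, f"confirmed_via_{best.modality}"


if __name__ == "__main__":
    noisy_room = [
        ModalityReading("voice", None, 0.0),     # speech failed in noise
        ModalityReading("touch", "stop", 0.95),  # touch still works
    ]
    print(resolve_command(noisy_room))
```

The feedback string returned alongside the command is what a designer would map to a visual or audio confirmation, so the user always knows whether the robot understood, needs confirmation, or needs the request repeated.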

For helpful insights into intuitive design, check out our article How to Create an Intuitive Design.

Emotion plays a key role in human-robot interaction, whether it’s genuine or perceived. Humans often anthropomorphize robots, expecting social cues like friendliness, empathy, or frustration. Robots don’t truly feel emotions, but expressive behavior, like tone of voice, facial expressions, or posture, can make interactions feel smoother and more engaging. For example, a robot that apologizes or hesitates when making a mistake appears more relatable.

However, be careful to avoid deceptive emotional cues that suggest intelligence or empathy the robot lacks. Ethical HRI balances emotional expression with transparency. Emotionally expressive robots can aid in healthcare, education, or therapy, building trust and motivation. Nevertheless, expression must match the robot’s capabilities and be honest, respectful, and context-aware.

Pick up timeless nuggets of wisdom to help your design efforts in our article The Key Principles of Contextual Design.

Key challenges include managing user expectations, designing intuitive motion, and balancing autonomy with control. People often overestimate robot intelligence, leading to confusion or disappointment. You’ll need to bridge the gap between perceived and actual capabilities.

Ensuring smooth, readable motion that feels natural is difficult, especially in unpredictable environments. Multimodal input adds complexity, especially when sensors or recognition systems fail. Ensuring accessibility, privacy, and ethical use raises further challenges.

Plus, you’ll need to address long-term trust: how does the relationship evolve over time? Testing in real-world settings is critical but resource-intensive. Successful HRI design requires interdisciplinary knowledge where you blend UX, psychology, robotics, and ethics to create safe, meaningful human-machine collaboration.

Find out how much of what users bring to an experience hinges on their mental models of how the thing they’re interacting with should work.

Safavi, F., Olikkal, P., Pei, D., Kamal, S., Meyerson, H., Penumalee, V., & Vinjamuri, R. (2024). Emerging frontiers in human–robot interaction. Journal of Intelligent & Robotic Systems, 110, Article 45.

This peer-reviewed review article explores three emerging themes in human–robot interaction (HRI): (1) human–robot collaboration modeled after human–human teamwork; (2) the integration of brain–computer interfaces (BCIs) to interpret brain signals for robotic control and communication; and (3) emotionally intelligent robotic systems that can sense and respond to human affect using cues like facial expression and gaze. The authors review techniques such as learning from demonstration, EEG-based control schemes, and affect recognition through multimodal signals. The article is significant for its systematic framing of these areas as central to the future of HRI and for highlighting the convergence of robotics with neuroscience and emotion AI.

Su, H., Wen, Q., Chen, J., Yang, C., Sandoval, J., & Laribi, M. A. (2023). Recent advancements in multimodal human–robot interaction. Frontiers in Neurorobotics, 17, Article 1084000.

This review article provides a comprehensive survey of multimodal human–robot interaction (HRI), where robots engage with humans through multiple simultaneous communication channels, such as speech, vision, touch, gesture, eye movement, and even bio-signals like EEG and ECG. The authors categorize input and output modalities and explore their applications in real-world interaction scenarios. They emphasize that multimodal HRI promotes more intuitive, natural, and robust robot behavior, especially in assistive and service robotics. The paper stands out for bridging HRI with cognitive science, affective computing, and Internet of Things (IoT) technologies. It’s a key contribution for defining trends and technical challenges in the design of responsive, socially integrated robotic systems.

Gunkel, D. J. (2012). The Machine Question: Critical Perspectives on AI, Robots, and Ethics. The MIT Press.

David J. Gunkel’s The Machine Question tackles the provocative ethical issue of whether intelligent machines, including robots and AI systems, deserve moral consideration. This philosophical inquiry challenges traditional frameworks of moral agency and patiency by examining the conceptual foundations of ethics through the lens of machine autonomy and consciousness. Drawing from philosophy, law, and cognitive science, Gunkel critically interrogates whether machines can be considered entities worthy of rights or responsibilities. The book has become a foundational reference in robot ethics and HRI, shaping discourse around responsibility, agency, and the future of human-machine coexistence.

Bartneck, C., Belpaeme, T., Eyssel, F., Kanda, T., Keijsers, M., & Šabanović, S. (2024). Human-Robot Interaction: An Introduction (2nd ed.). Cambridge University Press.

This is the leading introductory textbook on human–robot interaction (HRI), written by globally recognized experts from Europe, the US, and Japan. The book presents a well-rounded and multidisciplinary overview of HRI, covering technical systems, social and psychological theories, design practices, and evaluation methods. Now in its second edition, it reflects the most current advancements in HRI including ethical considerations, emotion modeling, and human-centered design. Suitable for students and professionals, it has been widely adopted in academic courses and is frequently cited in research. It remains one of the most comprehensive and accessible resources in the field.

Vinjamuri, R. (Ed.). (2024). Discovering the Frontiers of Human-Robot Interaction: Insights and Innovations in Collaboration, Communication, and Control. Springer.

Edited by Ramana Vinjamuri, this book presents cutting-edge research and emerging perspectives in HRI. It explores recent innovations in collaboration, brain-computer interfacing, emotional intelligence, trust, and robot teamwork. With contributions from leading international researchers, the volume captures the interdisciplinary breadth of current HRI research. It emphasizes real-world applications in healthcare, wearable robotics, and autonomous systems while also addressing foundational methodological and ethical issues. The book is valuable for researchers, engineers, and advanced students looking to stay on the frontier of HRI innovation. Its topical focus makes it especially relevant as robotics enter homes, industries, and public spaces.
