Designing for trust is paramount in Artificial Intelligence (AI) products. AI presents unique challenges that call for transparent interfaces, clear feedback, and ethical consideration to build user confidence. When you prioritize trust, you encourage user adoption and satisfaction, which improves the overall user experience of AI-powered products.

As AI Product Designer Ioana Teleanu explains in the next video, AI can hallucinate! How can designers make sure AI-enabled solutions are reliable and that users can trust them? Let’s find out.

Note: In the video, Ioana mentions Google Bard, which is now known as Google Gemini.

“We all fear what we do not understand.”

― Dan Brown, The Lost Symbol

The best way to build trust with our users is to be as transparent as possible (without overwhelming the user with too much technical information).

Clearly communicate:

Where does your system get its data? Indicate sources where possible.

What user-generated information does the system use? For example, does the system rely on other users to provide data?

How does your system learn from user data?

What are the chances of errors?
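
One way to act on the questions above is to attach sources and an error warning to every AI answer. The following is a minimal sketch, not a definitive implementation: the `AIAnswer` class, the `confidence` score, and the `caution_below` threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AIAnswer:
    """An AI response bundled with the details users need to judge it."""
    text: str
    sources: List[str] = field(default_factory=list)  # where the data came from
    confidence: float = 0.0  # hypothetical score between 0 and 1


def transparency_note(answer: AIAnswer, caution_below: float = 0.7) -> str:
    """Build a short, non-technical disclosure line to show with the answer."""
    parts = []
    if answer.sources:
        parts.append("Sources: " + ", ".join(answer.sources))
    else:
        parts.append("No sources available for this answer")
    # Warn the user when the (assumed) confidence score is low.
    if answer.confidence < caution_below:
        parts.append("This answer may contain errors, please verify it")
    return ". ".join(parts) + "."
```

The note stays short on purpose, so that it informs users without overwhelming them with technical detail.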

If your system relies on personal data (such as location data, demographic information or web usage metrics):

Always collect this information with full consent.

Ask users to explicitly opt in to sharing information instead of asking them to turn off the setting.

Allow the user to use your solution without providing any personal data.
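
The consent guidelines above can be sketched as settings that default to "off", combined with a feature that still works when nothing is shared. This is an illustrative sketch under assumptions: `ConsentSettings`, `pick_recommendations`, and the returned strings are hypothetical and not part of any real product or library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ConsentSettings:
    """Every data-sharing option starts off; only the user can turn it on."""
    share_location: bool = False
    share_demographics: bool = False
    share_usage_metrics: bool = False


def pick_recommendations(consent: ConsentSettings,
                         user_city: Optional[str] = None) -> str:
    """Personalize only with explicit consent; otherwise use a generic result."""
    if consent.share_location and user_city:
        return f"Popular near {user_city}"
    # No consent or no data: the product remains usable without personal data.
    return "Popular everywhere"
```

Because the defaults are `False`, a user who never opens the settings screen shares nothing, and the product still returns a useful result.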

Characteristics of a Trustworthy AI System

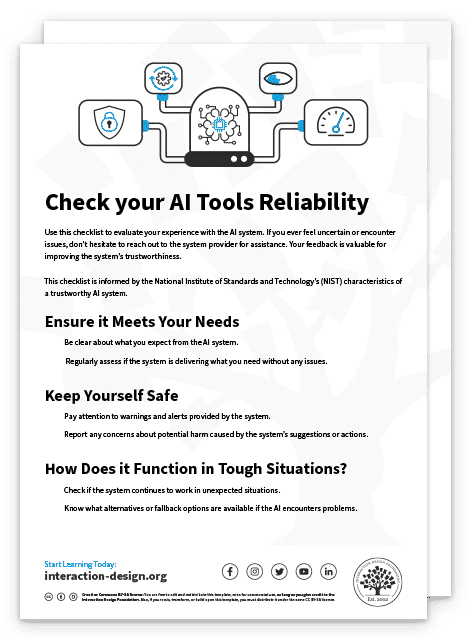

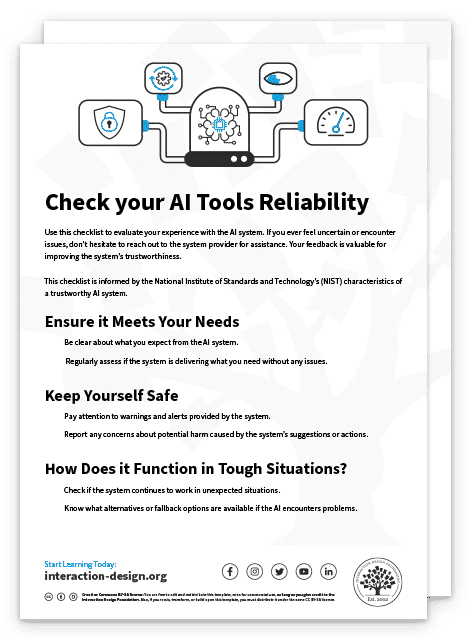

The National Institute of Standards and Technology (NIST) defines seven characteristics of a trustworthy AI system:

Valid and reliable: Validity refers to the system’s ability to meet user needs. Reliability refers to the system’s ability to keep performing without failure. To ensure your AI products are valid and reliable, define success criteria and metrics to measure the system’s performance. Continually assess the system to confirm it is performing as intended.
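
As a rough illustration of defining a success criterion and checking it, the sketch below compares an observed success rate against a target. The function name, the 95% target, and the idea of logging each interaction as a pass or fail are assumptions made for this example; real products would choose metrics that fit their own user needs.

```python
from typing import List


def meets_success_criteria(outcomes: List[bool],
                           min_success_rate: float = 0.95) -> bool:
    """Return True if the share of successful interactions meets the target.

    Each entry in `outcomes` is True when the system performed as intended.
    """
    if not outcomes:
        # With no observations, we cannot claim the system is reliable.
        return False
    success_rate = sum(outcomes) / len(outcomes)
    return success_rate >= min_success_rate
```

Running a check like this on a regular schedule is one way to keep assessing the system rather than validating it only once.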

Safe: AI systems must never cause harm to their users. Rigorously test and simulate real-world usage to detect possible use cases where the system may cause harm and address them through design. Designers, data scientists and developers must work together to safeguard user safety. For example, you may prohibit a user from performing certain actions based on their age or location, or display warnings prominently.

Secure and resilient: A system is secure if it is protected against unauthorized access and use. A system is resilient if it can continue to perform under adverse or unexpected conditions and degrade safely and gracefully when necessary. For example, you might design a non-AI-based fallback so that people can keep using the solution if the AI system breaks down.
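
The fallback idea can be sketched as a search feature that tries the AI first and switches to plain keyword matching if the AI fails. This is a minimal sketch under assumptions: `ai_search` stands in for whatever AI service a product uses, and `keyword_search` and `search` are hypothetical helpers written for this example.

```python
from typing import Callable, List, Tuple


def keyword_search(query: str, documents: List[str]) -> List[str]:
    """Non-AI fallback: return documents containing any word from the query."""
    words = query.lower().split()
    return [doc for doc in documents if any(w in doc.lower() for w in words)]


def search(query: str,
           documents: List[str],
           ai_search: Callable[[str, List[str]], List[str]]) -> Tuple[List[str], str]:
    """Try the AI search first; degrade gracefully to keyword search on failure.

    Returns the results and a mode label so the interface can tell the user
    which path produced them.
    """
    try:
        return ai_search(query, documents), "ai"
    except Exception:
        # The AI is unavailable or failed, so the user still gets basic results.
        return keyword_search(query, documents), "fallback"
```

Returning the mode alongside the results lets the interface tell users when they are seeing basic results, which also supports transparency.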

Accountable and transparent: Transparency refers to the extent to which users can get information about an AI system throughout its lifecycle. The more transparent a system is, the more likely people are to trust it. For example, the system can provide status updates on its functioning or information on its process so that people using the system can understand it better.

Explainable and interpretable: An explainable system is one that reveals how it works. The system can offer descriptions tailored to users’ roles, knowledge, and skill levels. Explainable systems are easier to debug and monitor.

Privacy-enhanced: Privacy refers to safeguarding users’ freedoms, identities and dignity. There is a tradeoff between enhanced privacy and bias. Allowing people to remain anonymous can limit the inclusive data needed for AI to function with minimal bias.

Fair with harmful bias managed: Fairness relates to equality and eliminating discrimination. Bias isn’t always negative. Fairness is a subjective term that differs across cultures and even specific applications.

Use this checklist to check the reliability of your AI tool.

Unsupervised: AI Art that Sidesteps the Copyright Debate

Generative AI can create stunning works of art. Unsupervised, a generative artwork by artist Refik Anadol, is part of his Machine Hallucinations project. Its abstract images are driven by data from the Museum of Modern Art (MoMA) and guided by machine learning and intricate algorithms, showcasing the intersection of art and cutting-edge AI research.

Anadol trained a unique AI model to capture the machine's "hallucinations" of modern art in a multi-dimensional space—data was collected from MoMA’s extensive collection and processed with machine learning models.

This project tackles the challenges of AI-generated art—it has huge potential for creative expression, but it raises concerns with transparency and ethics. Anadol's work invites a conversation about the interplay between art, AI research, and technology's far-reaching impact.

The art copyright debate centers on attributing creative rights in AI-generated artworks. Traditionally, copyright law is based on human authorship. Unsupervised addresses this issue by openly acknowledging the collaborative role of its AI model, StyleGAN2 ADA, in creating the art. This approach avoids copyright complexities by recognizing both the AI and the human artist, Refik Anadol, as co-creators. In doing so, Unsupervised fosters a shared authorship model, providing transparency and clarity in navigating the evolving landscape of art copyright for AI-generated works.

The Take Away

In design, building trust with users is paramount, and with AI, transparency plays a pivotal role. As designers, we must clearly communicate the system's data sources, how it learns from user data, and the probability of errors.

A trustworthy AI system possesses several vital attributes. Firstly, it must be valid and reliable, meeting user needs and performing consistently. Safety is non-negotiable; rigorous testing is crucial to detect potential harm, and collaboration between designers, data scientists, and developers is vital to ensure user safety.

Accountability and transparency are achieved through regular status updates and clear insights into the system's processes. Explainability and interpretability make the system understandable, aiding in debugging and monitoring.

Privacy-enhanced AI respects users' privacy while acknowledging the delicate balance between privacy and the inclusive data needed to manage bias. Lastly, designers should strive for fairness, a nuanced concept that varies across cultures, and carefully manage harmful biases to eliminate discrimination. Understanding and implementing these principles is fundamental to crafting ethical and trustworthy AI systems.