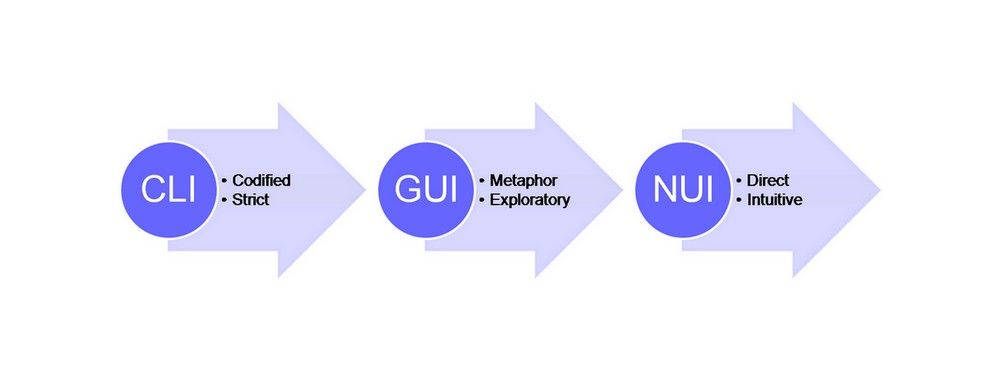

Natural User Interfaces – What does it mean & how to design user interfaces that feel natural


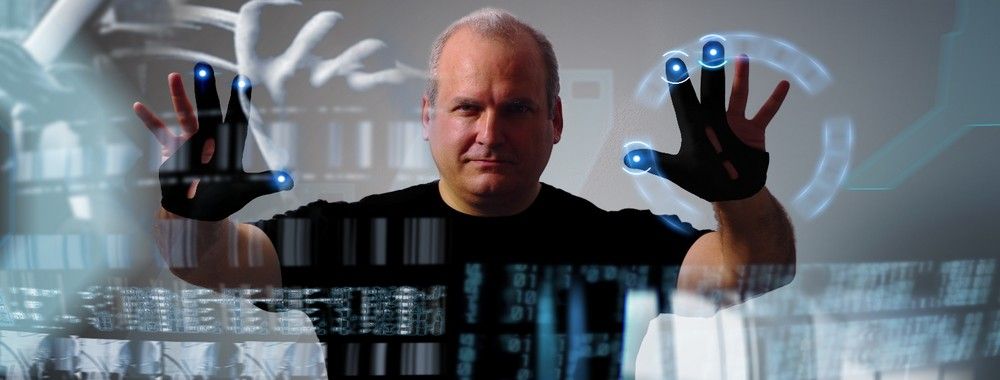

Gesture-based interaction is how users control digital systems using physical movements, such as hand swipes, pinches, or body gestures, instead of relying solely on taps, clicks, or keyboards. As a UX (user experience) designer, you can use gesture-based interaction to make interfaces feel intuitive, natural, and more human to users, empowering them to act as they would in the physical world, not just on a screen.

In this video, Frank Spillers, Service Designer, Founder and CEO of Experience Dynamics, shows you how simple, natural gestures can improve immersive interfaces while helping you avoid user fatigue.

Let’s start with a fun exercise to help envision the possibilities of designing gesture interactions. Imagine you’ve traveled into the future and find a hologram of a restaurant kitchen in front of you. Judging by the growling in your stomach, your “mission” is to order a pizza. You can select a variety of “functions” to build your ideal culinary creation, such as drawing a circle with your finger to outline the size, then reaching for and “lifting” holographic toppings from the many trays on the “counter” and sprinkling on as much as you like.

The novelty of this may strike you as being like virtual reality (VR), but you quickly notice you’re not wearing a headset. Interesting, but there’s more: you can indicate the thickness, or thinness, of the crust with a simple pinch, adjusting the space between your finger and thumb. Parmesan cheese? Just shake it on. And how soft or “fire-kissed” do you want your pizza? You might turn a temperature dial, or squeeze the air as if working a pair of bellows (a motion much like using garden shears) to stoke the oven’s heat; notice you’ve got two ways to do it. You instinctively know that tapping your wrist twice means you want it as soon as possible. The holographic pizza changes color to indicate your choices are set, awaiting your final approval. A simple thumbs-up from you and it’s on its way (maybe via a food-grade 3D printer).

Enjoy this video, which explains how virtual reality evolved into the immersive medium that inspires today’s gesture-driven interactions.

While some aspects of pizza-making and ordering like this already exist, consider how intuitive and fun it might be to engage with some future technology like the above example. You can engage in an activity in a natural way where you don’t even realize you’re doing any “work”; for your pizza order, for example, you didn’t have to type anything (not even a credit card number; maybe you’ll have an account on file). Welcome to the world of gesture-based interaction, where you can shift from traditional UI (user interface) thinking with its buttons, menus, and forms, towards designing for movement, space, and embodied communication. This approach takes care, diligent testing, foresight, and empathy for users, so here’s a process to use as your guide:

You’ll need to begin with a solid foundation of who it’s all for, why they’ll adopt and love using it, and how they’ll perform tasks, achieve goals, and enjoy truly seamless experiences. So, start every project by clarifying who will use your interface, where, and for what purpose, and ask:

Will users benefit from fluid motion instead of precise taps?

Will they have free limbs (hands, arms, body) or have something constraining them (such as if they’re on a bus, carrying things, or out in public)?

Does the task involve spatial manipulation, navigation, or natural mapping (like swiping, grabbing, rotating)?

Use gesture-based interaction when it fits natural human behavior and the user’s environment. For example, in augmented reality (AR), immersive experiences, or hands-free contexts, such as smart homes, TV, and public kiosks, gestures often feel more natural than, for instance, typing. However, when tasks demand precision, privacy, or subtlety, such as typing a password, traditional controls are usually the better choice.

In this video, Frank Spillers explains how augmented reality uses spatial mapping to support natural, intuitive gestures that align with a user’s real environment.

Designing gestures means you’ll need to define a gesture set (known as a vocabulary) that users can learn, remember, and execute with ease. They’ll arrive on your product with preconceived ideas, mental models and conceptual models of how things should work. So:

Use natural physical metaphors such as pinching to zoom, swiping to scroll, and waving to dismiss. Because these gestures build on movements people already know from real life, they’re well worth implementing: users won’t have to think about what they mean; they’ll simply perform them.

Keep gestures simple and physical effort light: don’t make users perform large or strenuous motions; design for small, comfortable hand or finger movements instead.

Ensure users can easily discover gestures: provide visual or contextual hints so users know gestures exist. Hidden or undocumented gestures, even simple ones, often go unused or are discovered only much later. Imagine how you might feel finding out by accident, many months into using a product, that a simple gesture does something you’d been doing with a long workaround.

Carefully evaluate gesture input properties, such as comfort, learnability, consistency, and robustness, under different conditions.

In this video, William Hudson, User Experience Strategist and Founder of Syntagm Ltd., explains how clear conceptual models help users understand your system, which is essential when you design gesture vocabularies that build on users’ existing expectations.

Gesture-based interaction is only possible when the system can reliably detect and interpret human movement. Your choice of hardware and sensing technology affects which gestures work and how well. Options include:

Touchscreens and touchpads for basic gestures like tap, pinch, and swipe.

Depth-sensing cameras, infrared sensors, and motion trackers for midair gestures, full-body or hand tracking, such as in AR and VR, smart TVs, or contactless UIs.

Wearables or motion controllers when fine-gesture detection or finger-level precision is needed.

Make sure the recognition system handles variability: different hand sizes, lighting conditions, user posture, speed of movement, and accidental gestures. For example, think of the potential for error and frustration if a user accidentally triggers a system response when all they meant to do was scratch an itch. Or what if your system doesn’t register a movement the first time, so the user has to make a second or third, more exaggerated gesture? Well-designed gesture recognition depends on robust sensing, accurate modeling, and testing across real-world scenarios, so be sure to account for all three.
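One common way to keep an itch-scratch from firing a command is to require a gesture to persist before acting on it. The sketch below assumes a hypothetical recognizer that reports a gesture label (or `None`) plus a confidence score for each camera frame; the function name `confirmed_gestures`, the 0.8 confidence floor, and the three-frame window are illustrative choices, not values from any particular system.

```python
from typing import Iterable, Iterator, Optional, Tuple

# Hypothetical recognizer output for one frame: (gesture label or None, confidence 0..1).
Frame = Tuple[Optional[str], float]


def confirmed_gestures(
    frames: Iterable[Frame],
    min_confidence: float = 0.8,
    min_frames: int = 3,
) -> Iterator[str]:
    """Yield a gesture once it has been seen in `min_frames` consecutive
    frames with confidence >= `min_confidence`; fire once per continuous run."""
    current: Optional[str] = None  # label of the run being tracked
    streak = 0                     # consecutive confident frames for `current`
    fired = False                  # has this run already triggered an action?
    for label, confidence in frames:
        if label is None or confidence < min_confidence:
            # An empty or low-confidence frame breaks the run.
            current, streak, fired = None, 0, False
            continue
        if label == current:
            streak += 1
        else:
            # A different gesture starts a new run.
            current, streak, fired = label, 1, False
        if streak >= min_frames and not fired:
            fired = True
            yield label


# A brief "pinch" blip (an itch scratch) is ignored; a sustained swipe fires once.
frames = [("pinch", 0.85), (None, 0.0)] + [("swipe_left", 0.9)] * 5
print(list(confirmed_gestures(frames)))  # ['swipe_left']
```

The window trades latency for robustness: at 30 frames per second, three frames add roughly 100 ms before the system responds, so tune it against your latency budget.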

Because gestures often involve invisible or midair movements, users need immediate, understandable feedback to feel in control. When you design gesture-based interaction:

Visual or auditory cues (icons, highlights, and sound) help users know the system recognized their gesture successfully.

Respond in real time, because lag or misrecognition breaks the illusion of natural interaction; even a tiny fraction of a second can make all the difference between delight and frustration.

Indicate possible actions: for example, with overlay hints or subtle animation that invites gestures. Affordances, which you’ll find in the physical world in the form of door handles, for example, need to help users in their digital experiences, too.

This kind of feedback helps bridge the gap between physical motion and digital reaction, which makes gesture interaction feel natural and reliable for the user, who’ll feel more engaged in a seamless experience because of your considerate and empathetic design.

Enjoy this video, which explains how clear affordances and signifiers help users understand what actions are possible, which is essential for making gesture-based interfaces feel responsive and trustworthy.

Because gesture-based interaction blurs the line between physical behavior and digital response, testing in real contexts is critical. Your users vary widely, so include people with different body types, abilities, and use contexts. Think about lighting, seating, posture, and the various situations in which different people may encounter your design solution. So, be sure to test for:

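Accuracy and error rates, including false positives (the system responds to a movement the user didn’t intend as a gesture) and false negatives (the system misses a gesture the user did make).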

Physical fatigue or discomfort over time. Gesturing constantly, especially with midair, large, or high gestures, can cause fatigue or discomfort, so pick gestures that permit comfortable, repeatable motions. Even if users never have to sweep a grand arc or karate-chop the air in front of them, making many far gentler movements over a day can still wear them out or strain their muscles.

Discoverability and learnability: Do users discover gestures and remember them? Make them ultra-easy for users to find, learn, and take to.

User satisfaction: Does gesture interaction feel natural and usable compared with traditional interfaces? Does it bring users enough enjoyment, novelty, and emotional engagement, and help make a seamless experience all the better and more pleasurable?
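For the first item on that list, a simple tally of test trials can make error rates concrete. This is a minimal sketch under assumed conventions: each trial records the gesture the participant intended (`None` when they deliberately made a non-gesture movement, such as scratching an itch) and what the system recognized (`None` when it did nothing). The function and category names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple

# One test trial: (gesture the participant intended, gesture the system recognized).
# None as "intended" = a deliberate non-gesture movement the system should ignore;
# None as "recognized" = the system did nothing.
Trial = Tuple[Optional[str], Optional[str]]
CATEGORIES = ("correct", "false_positive", "false_negative", "misrecognized")


def error_rates(trials: List[Trial]) -> Dict[str, float]:
    """Return the share of all trials that falls into each outcome category."""
    if not trials:
        raise ValueError("no trials to summarize")
    counts: Counter = Counter()
    for intended, recognized in trials:
        if intended == recognized:
            counts["correct"] += 1          # includes correctly ignored movements
        elif intended is None:
            counts["false_positive"] += 1   # responded to an unintended movement
        elif recognized is None:
            counts["false_negative"] += 1   # missed a real gesture
        else:
            counts["misrecognized"] += 1    # fired the wrong gesture
    return {c: counts[c] / len(trials) for c in CATEGORIES}


trials = [("swipe", "swipe"), ("swipe", None), (None, "tap"), (None, None), ("pinch", "swipe")]
print(error_rates(trials))
# {'correct': 0.4, 'false_positive': 0.2, 'false_negative': 0.2, 'misrecognized': 0.2}
```

Keeping misrecognitions separate from false positives and false negatives helps you tell a vocabulary problem (two gestures are too similar) from a sensitivity problem (the system is too eager or too reluctant to respond).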

A powerful way to stay one step ahead of your users and come out of testing with better results is to design using personas, research-based representations of real users. You can plug these personas into your design process, including through design mapping, to uncover even more valuable insights that help fine-tune your design solution.

In this video, William Hudson explains how design maps connect your personas to key design elements so you can make more informed design decisions.

In certain contexts, gesture-based interaction can support accessibility; for example, by offering alternative input modes to users who have difficulty with keyboards or touchscreens. Even so, gestures can also present challenges for people with motor disabilities, so accessibility benefits depend on the specific user needs, gesture design, and system capabilities. Therefore:

Offer alternative input methods, such as buttons, voice, and touch, alongside gestures.

Support adjustable sensitivity, gesture customization, or gesture disabling for comfort and accessibility.

Consider motor disabilities, cultural differences, and user preferences when defining gesture sets.
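Adjustable sensitivity, remapping, and disabling can all live in one small settings object. The sketch below is an illustration under assumptions: the gesture names, default bindings, and 60 px base swipe distance are hypothetical, and sensitivity simply divides that distance so higher values accept smaller motions.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set

# Hypothetical default bindings (gesture -> app action) and base threshold.
DEFAULT_BINDINGS: Dict[str, str] = {
    "swipe_left": "next_item",
    "swipe_right": "previous_item",
    "pinch_out": "zoom_in",
    "double_tap": "select",
}
BASE_SWIPE_DISTANCE_PX = 60.0


@dataclass
class GestureSettings:
    sensitivity: float = 1.0  # > 1.0 accepts smaller motions, < 1.0 requires larger ones
    disabled: Set[str] = field(default_factory=set)
    custom_bindings: Dict[str, str] = field(default_factory=dict)

    def __post_init__(self) -> None:
        if self.sensitivity <= 0:
            raise ValueError("sensitivity must be positive")

    def swipe_distance_px(self) -> float:
        """Minimum travel for a swipe, scaled by the user's sensitivity."""
        return BASE_SWIPE_DISTANCE_PX / self.sensitivity

    def action_for(self, gesture: str) -> Optional[str]:
        """Resolve a gesture to an action, honoring disabled and remapped gestures."""
        if gesture in self.disabled:
            return None
        return self.custom_bindings.get(gesture, DEFAULT_BINDINGS.get(gesture))


settings = GestureSettings(
    sensitivity=2.0,
    disabled={"pinch_out"},
    custom_bindings={"double_tap": "open_menu"},
)
print(settings.swipe_distance_px())       # 30.0
print(settings.action_for("pinch_out"))   # None
print(settings.action_for("double_tap"))  # open_menu
```

Because `action_for` returns `None` for a disabled gesture, every action still needs a button, voice, or touch alternative so users who switch gestures off can reach it.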

In this video, Alan Dix, Author of the bestselling book “Human-Computer Interaction,” and Director of the Computational Foundry at Swansea University, explains how cultural differences shape what people can perceive and understand in an interface, reminding you to design gesture alternatives that respect diverse abilities and contexts.

Copyright holder: Tommi Vainikainen; Appearance time: 2:56 - 3:03; Copyright license and terms: Public domain, via Wikimedia Commons

Copyright holder: Maik Meid; Appearance time: 2:56 - 3:03; Copyright license and terms: CC BY 2.0, via Wikimedia Commons; Link: https://commons.wikimedia.org/wiki/File:Norge_93.jpg

Copyright holder: Paju; Appearance time: 2:56 - 3:03; Copyright license and terms: CC BY-SA 3.0, via Wikimedia Commons; Link: https://commons.wikimedia.org/wiki/File:Kaivokselan_kaivokset_kyltti.jpg

Copyright holder: Tiia Monto; Appearance time: 2:56 - 3:03; Copyright license and terms: CC BY-SA 3.0, via Wikimedia Commons; Link: https://commons.wikimedia.org/wiki/File:Turku_-_harbour_sign.jpg

Far into the future, gesture-based interaction will likely coexist with touch, voice, and other inputs, each used where it makes the most sense. When you design gesture-based interaction properly, you unlock a range of advantages for user experience, engagement, and future-ready interfaces. The benefits of gesture interaction design done well include:

Humans have used gestures since prehistoric times, long before the first typewriters “decided” how your smartphone screen’s keypad looks. By leveraging gestures, something users already know, you reduce friction and increase approachability. Instead of having to learn new UI conventions, users rely on hand and body movements they understand instinctively through spatial reasoning. Signaling with gestures makes interfaces feel more human and less machinelike.

Gestures, such as swiping or pinching, can reduce the number of steps required for frequent tasks and may feel faster or more fluid in certain contexts. Note, though, that their efficiency depends on factors like gesture recognition accuracy, system latency, task complexity, and user familiarity.

Gesture-based interaction often feels more embodied and playful than traditional interfaces; physicality, immediacy, and novelty add layers of enjoyment, expressive ability, and personality. This emotional appeal can boost satisfaction, encourage exploration, and raise long-term engagement by giving users more delightful and expressive ways to interact than typing alone.

Gesture-based input can serve as an alternative interaction method for users who find traditional inputs like keyboards or touchscreens difficult to use. However, for users with certain motor or cognitive disabilities, gesture input may introduce usability challenges unless you design it specifically with accessibility in mind. Since accessible design is also the law in many parts of the world, it’s a particularly powerful consideration to “bake” into your interaction design process. Pick up some essential insights from this video, which explains why accessible design keeps digital experiences usable and enjoyable for people with disabilities and for other users, too.

As computing moves beyond phones and laptops and into AR/VR, smart environments, wearables, and ambient computing, gesture-based interaction design offers a path forward. It suits contexts where screens are inconvenient, unavailable, or inappropriate. While you might look at your smartphone’s screen with some disbelief on reading that, consider what UX design might look like in 20, 30, or even 40 years’ time. Screens will likely remain essential in many cases, but consider the exciting advancements already available in in-car systems, smart home control, and immersive spaces.

Exciting times lie ahead. Remember to design for thoughtfully integrated interactions, rather than “cool add-ons,” so users don’t feel that your interaction design based on gestures is gimmicky, intrusive, unnecessary, or too complex.

Overall, gesture-based interaction challenges you to think of interfaces not as flat canvases with buttons, but as entire living spaces of movement, physical intuition, and human behavior. When you design intentionally, interaction based on gestures becomes a powerful way to make technology feel natural, human, and alive.

Therefore, investigate how this form of interaction may help you design products that impress users and keep them coming back long into the future. Because gestures are timeless and intuitive, they’re likely to become more of a design reality, with many more applications featuring this mode of interaction. Instead of appearing only in science-fiction stories set a lifetime away, they’ll be within the grasp of many more users, whether to order pizzas, search for items, or enjoy more realistic games: wherever you, the designer, can take them.

Discover a vast fund of helpful design guidance in our course UX Design for Augmented Reality.

Enjoy our Master Class How to Innovate with XR with Michael Nebeling, Associate Professor, University of Michigan.

Enjoy our Master Class How To Craft Immersive Experiences in XR with Mia Guo, Senior Product Designer, Magic Leap.

Explore essential insights to design for gesture-based interactions, with our article How to Design Gesture Interactions for Virtual and Augmented Reality.

Discover further helpful points to help you understand and apply gesture interaction design insights, with our article Innovate with UX: Design User-Friendly AR Experiences.

The most common gestures include tap, double-tap, swipe (left, right, up, down), pinch (in and out), drag, long press, and rotate. These gestures let you interact quickly and intuitively with content. For example, you can swipe to scroll through a photo gallery or pinch to zoom into a map.

Tap and double-tap remain the most universal, especially for navigation and selection. Voice and motion gestures appear in newer devices, too, like smart TVs or AR (augmented reality) interfaces. When you’re designing, use standard gestures for familiar actions, like swipe to delete or drag to reorder, so users don’t have to relearn basic interactions. Avoid custom gestures unless they’re absolutely necessary, and always make sure any you add are easy to discover and remember.
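To make these distinctions concrete, here is a minimal sketch of how a touch recognizer might tell a tap, long press, and swipe apart from a finger’s down and up points. All thresholds (`TAP_SLOP_PX`, `SWIPE_MIN_PX`, `LONG_PRESS_MS`, `DOUBLE_TAP_GAP_MS`) are hypothetical illustration values; in production you would normally rely on the platform’s built-in recognizers, such as Android’s `GestureDetector` or iOS’s `UIGestureRecognizer` subclasses, which follow each platform’s conventions.

```python
import math
from typing import Optional, Tuple

# A touch point: (x in px, y in px, time in ms). All thresholds are hypothetical.
Point = Tuple[float, float, float]
TAP_SLOP_PX = 10.0         # movement still treated as "staying put"
SWIPE_MIN_PX = 50.0        # minimum travel for a swipe
LONG_PRESS_MS = 500.0      # hold time that turns a tap into a long press
DOUBLE_TAP_GAP_MS = 300.0  # maximum gap between two taps


def classify(down: Point, up: Point) -> Optional[str]:
    """Classify one touch from its down and up points."""
    dx, dy = up[0] - down[0], up[1] - down[1]
    distance = math.hypot(dx, dy)
    duration = up[2] - down[2]
    if distance >= SWIPE_MIN_PX:
        if abs(dx) >= abs(dy):
            return "swipe_right" if dx > 0 else "swipe_left"
        return "swipe_down" if dy > 0 else "swipe_up"  # screen y grows downward
    if distance <= TAP_SLOP_PX:
        return "long_press" if duration >= LONG_PRESS_MS else "tap"
    return None  # ambiguous: too far for a tap, too short for a swipe


def is_double_tap(first_up_ms: float, second_down_ms: float) -> bool:
    """Two taps count as a double-tap if the second starts soon after the first ends."""
    return 0 <= second_down_ms - first_up_ms <= DOUBLE_TAP_GAP_MS


print(classify((0, 0, 0), (80, 10, 120)))    # swipe_right
print(classify((10, 10, 0), (12, 11, 700)))  # long_press
```

Returning `None` for ambiguous movement means the system does nothing rather than guessing. Note also that to tell a single tap from the first half of a double-tap, a recognizer typically has to wait out the double-tap gap before acting on a single tap, which is one reason to reserve double-tap for actions where that delay is acceptable.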

Find a treasure trove of helpful insights for your designs, with our article Beyond AR vs. VR: What is the Difference between AR vs. MR vs. VR vs. XR?

Gesture interaction involves movements like swiping, pinching, or dragging, while touch interaction typically refers to basic actions like tapping or pressing a button. Gestures often trigger context-sensitive actions, like zooming or navigating, whereas touch is usually about direct selection.

Gesture-based interfaces rely more on spatial awareness and muscle memory, which can make them faster but potentially harder to learn. They feel more natural once you get used to them, but they often lack visible cues. Touch interactions, on the other hand, offer clear affordances like buttons or links. Use gestures to streamline frequent tasks, but always pair them with visual cues or fallback options to make the experience more intuitive, especially for new users.

Explore important points about touch and tactile interaction in the Encyclopedia of Human-Computer Interaction, 2nd Ed. entry for Tactile Interaction.

Gesture interaction can make apps feel faster and more fluid. It reduces clutter by removing the need for on-screen buttons, which creates more space for content. Once you learn gestures, you can perform complex tasks with just a few quick movements, such as swiping to archive an email or pinching to zoom into a photo. These shortcuts improve efficiency and make digital experiences feel more physical and natural. They let you use one hand, too, which is perfect for mobile use.

Plus, gesture control enhances immersion, especially in gaming, VR, and AR. To maximize these benefits, ensure your gestures are consistent, responsive, and supported by subtle visual or haptic feedback.

Veer into virtual reality for a wealth of helpful tips and insights to design with.

Gestures often lack visibility; as you don’t see a button, you might not know an action exists. This hidden nature can make gestures hard to discover and remember, especially if they’re custom or inconsistent. You can also trigger them by accident, which causes frustration.

Accessibility poses another issue; people with motor or certain other disabilities may struggle with precise gestures like swiping or pinching. Gestures can feel unintuitive across devices, cultures, or even hand sizes.

To reduce these risks, avoid overloading your interface with gestures. Combine gestures with visible UI (user interface) elements and offer onboarding or reminders. Test thoroughly across diverse users and contexts to ensure they’re truly usable.

Discover an important realm of design considerations regarding accessibility and why you should build it into every design solution you create.

Design gestures around familiar real-world actions. Swiping feels like turning a page; pinching mirrors zooming with a camera lens, for instance.

Stick to platform conventions; users expect similar gestures to work across apps. Limit gestures to high-value actions and avoid inventing complex or unique movements unless necessary. Support them with subtle animations, tooltips, or hints to show what’s possible.

Consistency is key: use the same gesture for the same action throughout your app. Always provide visual feedback, like highlighting an item when dragging. And conduct usability tests to ensure users understand and remember the gestures without tutorials. Simplicity, familiarity, and clear feedback make gestures more intuitive and memorable.
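A gesture map that grows across many screens is easy to let drift. The sketch below uses a hypothetical list of `(screen, gesture, action)` bindings and flags two consistency problems: one gesture bound to several actions on the same screen, and one action reached by different gestures in different places. The function name and binding format are assumptions for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical binding: (screen, gesture, action).
Binding = Tuple[str, str, str]


def find_conflicts(bindings: List[Binding]) -> Dict[str, List[str]]:
    """Flag overloaded gestures (one gesture, several actions on a screen)
    and inconsistent actions (one action, different gestures across the app)."""
    actions_by_slot: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
    gestures_by_action: Dict[str, Set[str]] = defaultdict(set)
    for screen, gesture, action in bindings:
        actions_by_slot[(screen, gesture)].add(action)
        gestures_by_action[action].add(gesture)

    problems: Dict[str, List[str]] = {"overloaded": [], "inconsistent": []}
    for (screen, gesture), actions in sorted(actions_by_slot.items()):
        if len(actions) > 1:
            problems["overloaded"].append(f"{screen}: {gesture} -> {sorted(actions)}")
    for action, gestures in sorted(gestures_by_action.items()):
        if len(gestures) > 1:
            problems["inconsistent"].append(f"{action}: {sorted(gestures)}")
    return problems


bindings = [
    ("inbox", "swipe_left", "archive"),
    ("search", "swipe_right", "archive"),  # same action, different gesture
    ("inbox", "long_press", "select"),
    ("inbox", "long_press", "delete"),     # same gesture, two actions
]
print(find_conflicts(bindings))
```

Running a check like this in a design review or test suite catches drift before users do.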

Command a greater grasp of design know-how, with our article Consistency: MORE than what you think.

To avoid accidental gestures, define clear gesture zones and use intentional actions like long presses or double taps instead of single, easy-to-trigger gestures. Add a slight delay before triggering a gesture to confirm the user’s intent. Don’t overload similar gestures: don’t assign different functions to up and down swipes on the same element unless users can easily tell them apart. Provide visual or haptic feedback during interaction to show that the system recognized the gesture. Use animations or transition cues to reduce confusion. Last, but not least, test across various hand sizes, devices, and real-world contexts to catch edge cases.
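The “slight delay” idea can be sketched as a hold that only counts if the finger stays put. This assumes hypothetical touch samples of `(x, y, time_ms)` for one finger; the 500 ms hold and 10 px slop are illustrative values, not platform defaults.

```python
import math
from typing import List, Tuple

# Touch samples for one finger, in time order: (x px, y px, time ms). Hypothetical format.
Sample = Tuple[float, float, float]


def intentional_hold(
    samples: List[Sample], hold_ms: float = 500.0, slop_px: float = 10.0
) -> bool:
    """True only if the finger stays within `slop_px` of its landing point
    for at least `hold_ms`; drifting further or lifting early cancels it."""
    if not samples:
        return False
    x0, y0, t0 = samples[0]
    for x, y, t in samples:
        if math.hypot(x - x0, y - y0) > slop_px:
            return False  # moved too far: probably a scroll or a brush against the screen
        if t - t0 >= hold_ms:
            return True   # held still long enough to count as deliberate
    return False          # lifted before the hold time elapsed


print(intentional_hold([(100, 100, 0), (102, 101, 200), (103, 99, 520)]))  # True
print(intentional_hold([(100, 100, 0), (130, 100, 200), (130, 100, 600)]))  # False
```

The slop tolerance matters as much as the delay: without it, a slow scroll that happens to last half a second would trigger the action.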

Explore an essential area to help guide your designs as you cater to users’ contexts of use.

Visual feedback confirms that your gesture worked. It reduces uncertainty and helps you understand how your actions affect the interface. For example, when you drag an item, it should follow your finger. If you swipe to archive, a color or icon should appear to signal what will happen.

Without feedback, you’re left guessing, which frustrates users and leads to mistakes. Feedback should be fast, consistent, and subtle enough not to distract from the task. Use animations, highlights, shadows, or haptic cues to reinforce the interaction. This feedback helps build confidence, reinforces mental models, and supports learning over time. Remember, it’s not just decoration; it’s essential communication, first and foremost.
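For the swipe-to-archive example, feedback can be driven directly by how far the item has been dragged. In this sketch the `reveal` and `commit` fractions of the row width are hypothetical values, and the returned stage names stand in for whatever animation or color change your design uses.

```python
def swipe_feedback(
    dx_px: float, row_width_px: float, reveal: float = 0.15, commit: float = 0.5
) -> str:
    """Map how far a list row has been dragged to a feedback stage."""
    if row_width_px <= 0:
        raise ValueError("row width must be positive")
    progress = abs(dx_px) / row_width_px  # fraction of the row width dragged
    if progress >= commit:
        return "commit"  # e.g., full archive color; releasing now archives the item
    if progress >= reveal:
        return "reveal"  # e.g., archive icon fades in to preview the outcome
    return "idle"        # the row simply follows the finger


for dx in (20, -100, 250):
    print(dx, swipe_feedback(dx, row_width_px=400))
# 20 idle
# -100 reveal
# 250 commit
```

Releasing the row before it reaches the commit stage should snap it back, which keeps the gesture reversible and forgiving of accidental drags.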

Harvest a healthy wealth of insights about haptic interfaces and how to accommodate users appropriately with feedback they can feel.

Make gestures inclusive by supporting a wide range of motion and offering alternatives. For users with limited mobility, include tap-based or voice options. Allow gesture customization when possible, especially in accessibility settings. Keep gesture areas large enough to prevent precision issues, and avoid time-sensitive interactions.

Also, provide clear visual cues so users can understand available gestures without trial and error. And support assistive technologies like screen readers and voice control. Test your gestures with real users who have different abilities and devices. Accessibility-first design ensures everyone can interact with your app, and it often improves usability for everyone else, too.

Access the world of assistive technology to get a greater understanding of how many users encounter and interact with products, services, and the brands behind them.

Gestures can carry different meanings across cultures. A “thumbs up” might signal approval in one region and be offensive in another, for example. The direction of swipes can matter, too: some cultures read right to left, which can influence expectations. Users from different backgrounds may interpret gestures differently or find some interactions unnatural.

When you’re designing, research your target audience’s cultural norms and avoid gestures with conflicting meanings. Stick to universally accepted actions like tap, swipe, or pinch whenever possible. If your app targets global users, include regional gesture preferences and support localization. Cultural sensitivity prevents misunderstandings and makes your app feel more welcoming in more places.
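Supporting right-to-left locales often comes down to mirroring horizontal swipes. The sketch below assumes a carousel-style convention in which, in left-to-right layouts, swiping left moves forward to the next item; `logical_swipe` and the direction names are hypothetical.

```python
def logical_swipe(physical: str, rtl: bool) -> str:
    """Map a physical horizontal swipe to a logical direction.

    Assumed convention: in left-to-right layouts, swiping left moves
    forward (to the next item); right-to-left layouts mirror this."""
    forward = "swipe_right" if rtl else "swipe_left"
    backward = "swipe_left" if rtl else "swipe_right"
    if physical == forward:
        return "forward"
    if physical == backward:
        return "back"
    raise ValueError(f"not a horizontal swipe: {physical!r}")


print(logical_swipe("swipe_left", rtl=False))  # forward
print(logical_swipe("swipe_left", rtl=True))   # back
```

Binding actions to logical directions rather than physical ones lets the same gesture map serve both reading directions.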

Discover helpful points about how to Design for Other Cultures in our article discussing this essential design consideration.

Hidden gestures with no hints top the list: users can’t use what they don’t know exists. Another mistake is using the same gesture for multiple actions, which confuses users. Overloading gestures, or adding too many, is another problem and makes the interface harder to learn.

Lack of visual feedback or delayed responses creates frustration, as well. Some apps disable gestures on certain screens, breaking consistency. And complex or non-standard gestures take too much effort to learn.

Avoid these pitfalls by keeping gestures simple, consistent, and discoverable. Always provide feedback and design fallback options. And test with real users and refine based on their struggles, not assumptions.

Find your way to easier-to-use design solutions by understanding discoverability and why it’s essential.

Use short, interactive onboarding to introduce key gestures. Show a quick demo, such as an animation or overlay, that explains what to do and why it matters. Reinforce gestures contextually: for example, show a “swipe to delete” hint the first time a user hovers near a list item.

Use progressive disclosure, where you introduce gestures gradually instead of all at once. If possible, let users practice gestures safely in a tutorial mode. Provide reminders for underused gestures and include a help or tips section. And don’t rely on gestures alone; pair them with buttons or labels when users might miss the interaction. Teaching gestures should feel seamless, not like doing homework.
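Progressive disclosure and reminders for underused gestures can be sketched as a small scheduling rule. The sketch assumes hypothetical per-user usage records: each gesture is introduced at a given session, hinted at most twice, and never hinted once the user has performed it. The names and limits are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GestureUsage:
    name: str
    unlock_session: int  # session in which this gesture is first introduced
    times_used: int = 0
    hints_shown: int = 0


def hints_to_show(
    gestures: List[GestureUsage], session: int, max_hints: int = 2, per_session: int = 1
) -> List[str]:
    """Choose which gesture hints to show this session: only gestures that are
    already introduced, still unused, and not yet hinted `max_hints` times."""
    candidates = [
        g for g in gestures
        if session >= g.unlock_session and g.times_used == 0 and g.hints_shown < max_hints
    ]
    # Earliest-introduced first, so gestures keep arriving gradually.
    candidates.sort(key=lambda g: g.unlock_session)
    return [g.name for g in candidates[:per_session]]


gestures = [
    GestureUsage("swipe_to_archive", unlock_session=1, times_used=3),
    GestureUsage("long_press_to_select", unlock_session=1, hints_shown=2),
    GestureUsage("pinch_to_zoom", unlock_session=2),
    GestureUsage("two_finger_undo", unlock_session=5),
]
print(hints_to_show(gestures, session=3))  # ['pinch_to_zoom']
```

After showing a hint, increment that gesture’s `hints_shown`; the cap and the one-hint-per-session limit keep teaching from feeling like homework.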

Peer closer at progressive disclosure for a wealth of helpful tips on how to ease users into your designs.

Vatavu, R.-D. (2020). “Gesture-Based Interaction.” In Interaction Techniques and Technologies in Human-Computer Interaction (pp. 155–178). CRC Press.

(Note: this is a chapter, but one of the most authoritative on gesture-based interaction.)

In this well-regarded chapter, the author dives deeply into what “gesture-based interaction” really means: the nature of human gestures, gesture types (e.g., static vs dynamic, body vs finger gestures), domains of application, and the technical underpinnings (recognition, representation, engineering). It addresses important design challenges such as fatigue, discoverability (how users know what gestures are available), and accessibility for users with varying sensory or motor capabilities. Because it bridges both design and engineering, it's a go-to resource for anyone designing gesture-based UX.

Oudah, M., Al-Naji, A., & Chahl, J. (2020). Hand gesture recognition based on computer vision: A review of techniques. Journal of Imaging, 6(8), 73.

This review surveys computer vision–based techniques for hand gesture recognition, covering static and dynamic gestures, segmentation strategies, and recognition methods such as SVMs, HMMs, and CNNs. It compares vision-based techniques to glove-based sensing and evaluates challenges like lighting conditions, occlusions, and computational cost. With over 500 citations, it’s a key resource that has helped standardize understanding in this field. Its value lies in its comprehensive synthesis and accessibility, serving as a foundation for researchers and UX professionals working on real-time, camera-based gesture interaction.

Wang, Z., Zhang, H., & Fan, C. (2023). Research progress of human–computer interaction technology based on gesture recognition. Electronics, 12(13), 2805.

This article provides a comprehensive survey of gesture recognition technologies for human-computer interaction. It outlines diverse input modalities, including camera-based vision, radar, electromagnetic sensors, and electromyography (EMG), and evaluates their application in fields like smart homes, assistive tech, and healthcare. It also discusses recognition challenges such as cross-device variability, wearability, and robustness.

Pasquale, T., Gena, C., & Vernero, F. (2024). An empirical evaluation for defining a mid-air gesture dictionary for web-based interaction. arXiv.

This study explores which mid-air gestures users find intuitive for typical web tasks. Through a large-scale user study (248 participants), the authors derive a consensus-driven “gesture dictionary” for web-based actions like navigation and selection. The research highlights gesture mappings that users intuitively prefer, supporting more user-centered design for touchless interfaces. This empirical grounding is key for UX designers aiming to improve gesture discoverability and reduce cognitive load in web contexts.

Xu, P. (2017). A real-time hand gesture recognition and human-computer interaction system. arXiv.

This paper presents a complete, real-time gesture-based HCI system using a monocular camera. It combines CNN-based gesture recognition with Kalman filter–based pointer control to enable touchless mouse and keyboard simulation. The system achieves low-latency interaction without the need for specialized sensors. Widely cited, it’s often referenced in engineering applications and DIY gesture system builds. Its significance lies in demonstrating that affordable, non-specialized hardware can deliver robust gesture interaction, making it especially relevant for developers and UX teams prototyping low-cost touchless interfaces.

Niu, P. (2025). Convolutional neural network for gesture recognition human-computer interaction system design. PLOS ONE, 20(2), Article e0311941.

Niu proposes a novel CNN model optimized for hand gesture recognition in human-computer interaction. The architecture integrates multi-scale convolution and ELU activation functions to enhance feature extraction while maintaining computational efficiency. The model shows impressive accuracy on NUS-II (92.55%) and ASL-M (98.26%) datasets. This work is important because it balances accuracy and efficiency, enabling gesture interaction systems that are fast, precise, and deployable in real-time applications such as AR/VR and accessible computing.

Kaushik, M., & Jain, R. (2014). Gesture based interaction NUI: An overview. arXiv.

This early overview captures the emergence of gesture-based interaction within Natural User Interfaces (NUIs). It examines gesture types (static vs. dynamic), sensing technologies (camera-based, glove-based), and input contexts (2D touch, 3D motion, full-body). The paper remains well-cited and historically important, capturing the optimism and challenges of early gesture interface adoption. It provides useful context for researchers tracing the evolution of gesture HCI or benchmarking the field’s progress from the 2010s to the present.

Maher, M. L., & Lee, L. (2017). Designing for Gesture and Tangible Interaction. Springer.

This book provides a focused, design‑oriented exploration of two “embodied interaction” modalities: tangible interaction and gesture‑based interaction. The authors reconceptualize traditional input mechanisms, for instance treating a keyboard as a “Tangible Keyboard” or 3D models as “Tangible Models.” In the gesture‑interaction section they present design scenarios such as walk‑up‑and‑use public displays and gesture‑driven dialogue metaphors, and derive interaction principles grounded in cognition, affordances, and embodied metaphor. The volume concludes with a call for further research into cognitive effects of embodied modalities. For any UX designer or researcher building beyond the WIMP paradigm (windows/icons/menus/pointer), this is a foundational, credible resource.

Stephanidis, C., & Salvendy, G. (Eds.). (2024). Interaction Techniques and Technologies in Human‑Computer Interaction. CRC Press.

This edited volume offers a broad, up-to-date survey of interaction design techniques and emerging interface modalities, including conventional input (keyboard/mouse), as well as newer forms like gesture-based interaction, wearable interfaces, haptic/tactile systems, voice, embodied & tangible interaction, and even extended-reality and brain–computer interfaces. The book’s “Gesture‑Based Interaction” and “Tangible and Embodied Interaction” chapters situate gesture and physical-body–mediated interaction within a wider HCI ecosystem, helping readers decide when each modality is appropriate given context, device, user needs, and trade‑offs. For designers, researchers, and students of HCI, this volume serves as a modern reference to navigate the growing complexity of interaction methods available today.

Cardoso, A. P., Perrotta, A., Silva, P. A. P., & Martins, P. (Eds.). (2023). Advances in Tangible and Embodied Interaction for Virtual and Augmented Reality. MDPI / Electronics Book Series.

This edited collection synthesizes recent research on tangible and embodied interaction, particularly in the context of immersive environments (virtual and augmented reality). It covers how physical affordances, object manipulation, body‑based gestures, spatial interaction, and embodiment can be leveraged to create more natural, intuitive, and immersive user experiences where “bits” meet “atoms.” The book is especially relevant, as AR/VR headsets, spatial computing, and embedded interactive systems become more mainstream, and designers must reconcile the physical and digital, ensuring gesture and touch feel meaningful, ergonomic, and cognitively grounded. The volume offers both theoretical frameworks and concrete case studies, making it a valuable reference for practitioners and researchers focused on embodied/ spatial UIs.

