No-UI: How to Build Transparent Interaction

- 2 years ago

No-UI (no-user interface) design is an approach where you design digital experiences that don’t rely on traditional screens or visual interfaces. Instead of tapping, swiping, or clicking, users interact through voice, sensors, automation, or context-aware systems. You can use no-UI principles to create seamless, invisible interactions that help people achieve goals with less effort and distraction.

In this video, Alan Dix, Author of the bestselling book “Human-Computer Interaction” and Director of the Computational Foundry at Swansea University, helps you recognize the many computers surrounding you to show why no-UI design relies on invisible, embedded technology.

To design no-UI interactions effectively, you need to shift from visual control to contextual assistance. This doesn’t mean removing all interfaces; it means removing unnecessary interfaces. Here’s how to do it.

Start by defining what the user wants to achieve, not just what they’ll do.

It’s also essential to understand the context of use: what’s going on around the user as they try to get something done. “Traditional” UI design often focuses on micro-interactions with buttons, sliders, and menus. In no-UI design, the focus on outcomes becomes all the more important.

For example, instead of designing an app to adjust a thermostat, design a system that keeps the room comfortable automatically based on presence, preferences, and environmental conditions.

Ask questions like:

- What does the user want to accomplish?

- Can the system anticipate this need based on behavior, location, time, or environment?

- Can the task be triggered passively or contextually?

In this video, Alan Dix shows you how understanding the user’s full context helps you design no-UI systems that anticipate needs rather than rely on screen-based interactions.

No-UI design is at its best when users don’t need to do much. So, use sensors, ambient data, and intelligent defaults to reduce or eliminate input. If the system can infer what the user needs without a direct command, let it act on that inference.

Try to stay a step ahead of your user’s needs, too. For instance, instead of requiring users to open an app to turn on smart lights, let the lights activate when the user enters a room, using motion detection, geofencing, or time-based routines.

Use passive triggers like location awareness (via GPS or beacons), environmental sensing (light, sound, temperature), and behavioral patterns (time of day, routine).
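To make the idea of passive triggers concrete, here is a minimal sketch of a rule that decides whether to switch on the lights from context alone. Everything in it is an assumption for illustration: the `RoomContext` fields, the 50-lux darkness threshold, and the overnight quiet hours are hypothetical, and a real system would read them from its own sensors and user preferences.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class RoomContext:
    """Snapshot of passive signals (all field names are hypothetical)."""
    occupied: bool                 # e.g. from a motion or presence sensor
    ambient_lux: float             # e.g. from a light sensor
    local_time: time               # wall-clock time where the room is
    manual_override: bool = False  # the user asked for the lights to stay off


DARK_THRESHOLD_LUX = 50.0  # assumed cutoff below which the room counts as dark
QUIET_START = time(23, 0)  # assumed start of overnight quiet hours
QUIET_END = time(6, 0)     # assumed end of overnight quiet hours


def in_quiet_hours(t: time) -> bool:
    """True inside the quiet window, which wraps past midnight."""
    return t >= QUIET_START or t < QUIET_END


def should_turn_on_lights(ctx: RoomContext) -> bool:
    """Decide from context alone; an explicit user override always wins."""
    if ctx.manual_override:
        return False
    if not ctx.occupied:
        return False
    if in_quiet_hours(ctx.local_time):
        return False
    return ctx.ambient_lux < DARK_THRESHOLD_LUX
```

Note that the override check comes first. Even in a rule this small, the user’s explicit choice outranks whatever the sensors suggest.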

No-UI design doesn’t mean no interaction, and it certainly doesn’t signal that no UI designers are needed. It means the interaction happens through more natural means: voice, gesture, proximity, or automation.

So, design these interactions to feel like part of the environment:

- Voice commands for controlling smart devices.

- Wearables that respond to motion or biometric data.

- Cars that adjust seat settings based on the driver’s profile.

Build interactions that feel invisible, contextual, and human, not button-driven. Your goal is to reduce friction, not functionality; keep users feeling calmly empowered.

Users need clarity, and that need is arguably greater when there’s no screen to tell them what’s going on. Users need to know what’s happening, what caused it, and how to change it. So, use:

- Audio cues (a chime or spoken feedback)

- Haptic feedback (vibrations or physical response)

- Simple verbal confirmations (“Lights off” after a voice command)

- Clear status signals (a light ring, an animation, or a short tone: whatever’s appropriate)

Feedback is essential, so always confirm when actions happen, and give users control to override or correct the system. Transparency builds trust and helps take angst and fear out of the relationship between human and system. Consider, for example, going on vacation and wondering briefly if you did, indeed, turn off the closet light. For a moment, you’re unsure, and then feel a surge of relief from remembering the friendly “Closet light off” voice.
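The “confirm every action, and let users correct it” pattern can be sketched in a few lines. This is an illustrative outline only: `Action`, `FeedbackController`, and the `announce` callback are hypothetical names, and `announce` stands in for whatever output channel your product has, such as a speaker, a chime, or a haptic motor.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Action:
    description: str            # what the user hears, e.g. "Closet light off"
    apply: Callable[[], None]   # performs the change
    revert: Callable[[], None]  # restores the previous state


@dataclass
class FeedbackController:
    announce: Callable[[str], None]  # hypothetical output channel
    history: List[Action] = field(default_factory=list)

    def perform(self, action: Action) -> None:
        """Apply the action, remember it, and confirm it to the user."""
        action.apply()
        self.history.append(action)
        self.announce(action.description)

    def undo_last(self) -> bool:
        """Revert the most recent action; report clearly if there is none."""
        if not self.history:
            self.announce("Nothing to undo")
            return False
        action = self.history.pop()
        action.revert()
        self.announce(f"Undone: {action.description}")
        return True
```

Under these assumptions, switching off the closet light through `perform` would announce “Closet light off”, and a later `undo_last` would restore the light and say so. The user is never left guessing in either direction.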

In this video, Alan Dix shows you how haptic and tactile cues can give users clear, reassuring feedback when no screens are available.


Automation is powerful, but it’s risky if overdone. Remember the purpose is to enhance lives, never to do harm. Don’t take away user agency. Instead, give users the ability to opt in, override, or customize. So:

- Let users set preferences and boundaries.

- Offer fallback options, such as voice + app + physical switch.

- Allow learning systems to adjust gradually, with feedback.

For example, consider a fridge that auto-orders groceries. Now consider a user who decides to try a vegan diet. Or how about a user who just lost their job and needs to economize more? To be a truly “smart” fridge under those circumstances, it should first confirm user preferences and budget constraints and then provide easy ways to cancel or adjust.
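As a rough sketch of the fridge scenario, the function below only proposes an order. It filters candidates against the user’s dietary exclusions and remaining budget, and it leaves the actual purchase to a separate confirmation step. All names here (`Item`, `Preferences`, `propose_order`, the tag strings) are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Sequence, Tuple


@dataclass(frozen=True)
class Item:
    name: str
    price: float
    tags: FrozenSet[str] = frozenset()  # e.g. {"dairy"}, {"meat"}


@dataclass(frozen=True)
class Preferences:
    excluded_tags: FrozenSet[str] = frozenset()  # e.g. animal products for a vegan diet
    weekly_budget: float = 0.0
    auto_order_enabled: bool = False             # opt-in: off until the user enables it


def propose_order(
    candidates: Sequence[Item],
    prefs: Preferences,
    spent_this_week: float,
) -> Tuple[List[Item], List[Item]]:
    """Return (proposed, skipped). Nothing is bought here: the proposal
    is meant to be shown to the user, who can confirm, adjust, or cancel."""
    if not prefs.auto_order_enabled:
        return [], list(candidates)

    proposed: List[Item] = []
    skipped: List[Item] = []
    remaining = prefs.weekly_budget - spent_this_week
    for item in candidates:
        if item.tags & prefs.excluded_tags or item.price > remaining:
            skipped.append(item)
        else:
            proposed.append(item)
            remaining -= item.price
    return proposed, skipped
```

Returning the skipped items alongside the proposal is a deliberate choice. It lets the system explain why something was left out, which supports the transparency the user needs to trust the automation.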

As no-UI designs aren’t things you can test with mockups alone, you’ll need to simulate context: the physical environment, user behavior, and sensor data. So, use:

- Wizard-of-Oz prototype testing (where you fake automation behind the scenes).

- Role-playing scenarios in realistic environments.

- Prototyping tools that simulate voice or sensor input.

Test how users react when there’s no screen. Can they understand what’s happening? Can they get what they need quickly?

Watch and find out how paper prototypes and simple Wizard-of-Oz methods help you test no-UI ideas quickly and realistically.

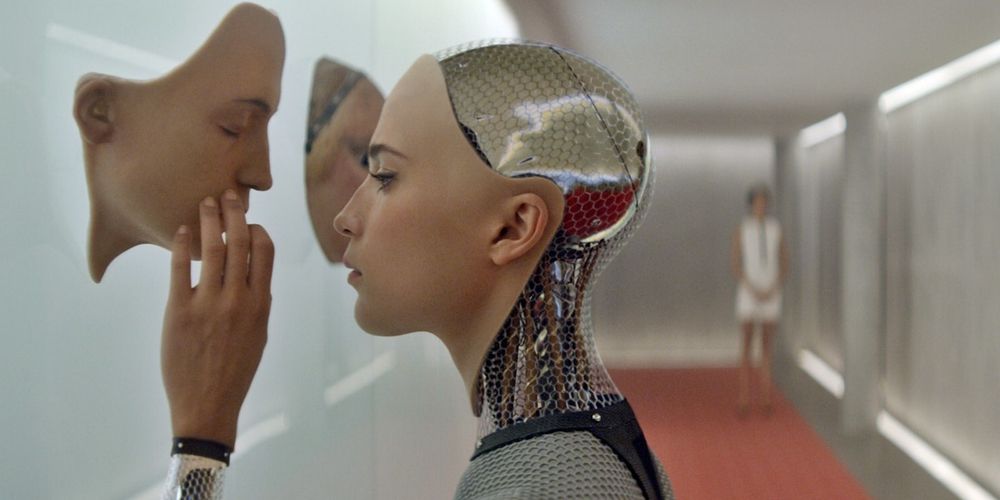

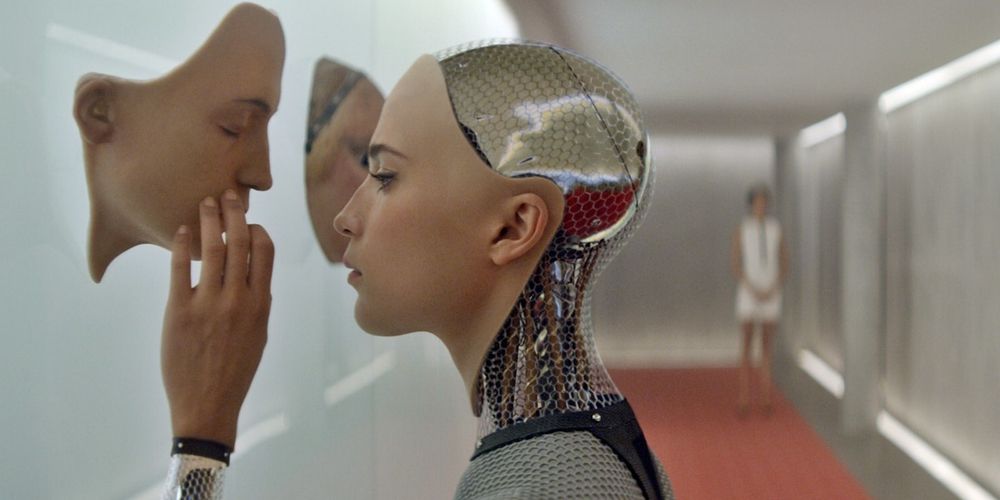

Many no-UI systems rely on data collection, ambient monitoring, or AI (artificial intelligence) decision-making. For all the empowerment and convenience modern devices offer, consider how sensitive an issue like AI decision-making can be. Dystopian ideas of environments in which walls have “ears” and all-seeing electric eyes relay information to interested watchers who track people’s every move may sound like science fiction. However, think about where those fears might come from. Fear lies in the unknown murk of illicit acts like hacking, of not knowing what’s going on, or even of unethical design to begin with. Trust and transparency are, therefore, vital, and you must build for privacy, transparency, and dignity. So:

- Always inform users what data is collected and why.

- Provide opt-in choices, not just opt-outs.

- Respect context; don’t use microphones or cameras without clear purpose and consent. This is especially important, as fears of being recorded can cause significant anxiety and distress, particularly when users don’t know who might access their words or images, or where that data could end up. If you wouldn’t want it done to you, don’t do it to others.

- Avoid interactions that feel invasive or creepy (like talking ads or always-listening devices without user control).
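One way to picture “opt-in, with a stated purpose” is a small consent registry that every sensor read must pass through. The sketch below is illustrative only: `ConsentRegistry` and its method names are hypothetical, and a real product would also need persistence, auditing, and a user-facing way to review grants.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ConsentRegistry:
    """Tracks which sensors the user has explicitly opted into, and why."""
    _grants: Dict[str, str] = field(default_factory=dict)  # sensor -> purpose

    def grant(self, sensor: str, purpose: str) -> None:
        """Record an opt-in; a stated purpose is mandatory."""
        if not purpose.strip():
            raise ValueError("a purpose is required to enable a sensor")
        self._grants[sensor] = purpose

    def revoke(self, sensor: str) -> None:
        """Withdraw consent; revoking an unknown sensor is harmless."""
        self._grants.pop(sensor, None)

    def allowed(self, sensor: str) -> bool:
        """Sensors are off by default: only explicit grants count."""
        return sensor in self._grants

    def summary(self) -> Dict[str, str]:
        """What is collected and why, in a form you can show to the user."""
        return dict(self._grants)
```

Because `allowed` returns `False` for anything not explicitly granted, a microphone or camera stays off until the user turns it on. The `summary` output gives you the raw material for telling users what is collected and why.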

To a “traditional” design mindset, a lack of user interface might at first seem daunting, as if it works against the idea of empowering users. For a rough analogy, imagine climbing into a car’s driver’s seat and finding no steering wheel or visible controls whatsoever. Fortunately, UX design has already made great strides away from the confines of a single means of interaction.

Visual-only UIs still abound, but design has expanded to VUIs (voice user interfaces) and beyond, such as gesture-based designs. For example, consider the convenience Amazon’s Alexa introduced and how the Google Nest helped bring sophisticated user experiences home to users in the 2010s. As people bring more and more computer-equipped devices into their homes, and ubiquitous computing and calm computing become more prevalent, the potential and need for no-UI design have risen, and will continue to rise.

Designing no-UI experiences offers transformative advantages. Done well, it removes friction, respects attention, and brings technology closer to human behavior. The main benefits are outlined below.

No-UI designs feel natural when designed well. Instead of navigating menus or apps, users interact through voice, motion, proximity, or passive context, which makes everyday tasks faster and smoother.

Think about how it feels when your smart assistant plays your favorite music when you walk in, or your coffee maker starts brewing when your alarm goes off. Magic? Not really; it’s just good no-UI design.

You don’t need a time machine to see how much more overwhelmed by screens and constant inputs people are now than they were 20 or 30 years ago. No-UI lets you design experiences that give users back their attention and a slice of the “quiet” that modern living seems, for many of us, to have cut out. It offloads decision-making, reduces the need to focus on interfaces, and blends into the background.

That leads to better focus, less stress, and more satisfying interactions with technology, and with your brand.

Not everyone can, or wants to, interact through screens. No-UI opens up possibilities for users who have vision disabilities, have limited dexterity, or are busy doing other things. Accessible design matters for more than the potential legal consequences of neglecting it. For example, consider how:

- Voice interfaces help users with visual disabilities.

- Gesture controls support hands-free access.

- Automation helps older adults or people with cognitive disabilities.

- Multi-modal interaction lets people choose how they engage.

Designing to accommodate these helps all users; when you remove the visual barrier, you make room for everyone.

Pick up valuable insights as this video explains how accessibility principles ensure that every user, including those with disabilities, can interact smoothly and confidently with your designs.

No-UI systems often skip steps entirely. Instead of launching an app, logging in, choosing a menu, and adjusting a setting, a single phrase or motion can complete the task. That makes everyday routines simpler and faster; plus, it can enhance safety in hands-busy or time-sensitive environments. For example, when was the last time you wished you had an extra hand while cooking, or knew you couldn’t risk taking your eyes off the road, or a hand off the steering wheel, for even a split second while driving?

As devices become further embedded in our homes, cars, clothing, and cities, traditional GUIs (graphical user interfaces) don’t scale. No-UI principles allow interaction to extend beyond screens, to the world itself.

This supports Mark Weiser’s vision of ubiquitous computing: technology that fades into the background, helping quietly when needed. And that’s the essence of ambient, adaptive interaction.

No-UI design challenges the old idea that screens and buttons are always the best way to interact with technology. Overall, it’s a life-enhancing convenience that represents both an advancement in technology and, in several instances, a triumph in turning what was once science fiction into everyday fact. However, the challenge remains for you to be careful and empathetic in how you bring no-UI design to your users, in their homes, in their contexts, in their lives.

When you design without visible interfaces, you unlock new possibilities for accessibility, speed, simplicity, and calm. Remember, no-UI isn’t about removing design; it’s about designing differently. You create experiences that anticipate needs, respond naturally, and respect users’ time and attention. Do it well, and wisely, and you’ll bring technology closer to users, more like a helpful friend, less like a demanding machine. You stop asking users to learn your interface, and instead let the interaction feel like part of their world. Imagine how secure a user in a smart home can feel when they know the brands that serve them do it ethically and with a genuine spirit of customer care. That takes calm computing to a level where a no-UI design can win, hands down.

Discover essential points about no-UI design and a treasure trove of related subjects with our course AI for Designers.

Enjoy our Master Class The AI Playbook: How to Capitalize on Machine Learning with Eric Siegel, Ph.D., CEO of Gooder AI and Author of “The AI Playbook” and “Predictive Analytics” for a wealth of helpful insights.

Check out our article One Size Fits All? Definitely Not in Task-Oriented Design for Mobile & Ubiquitous UX for helpful insights into modern design and how to do it well.

No-UI design removes screens and buttons from the core interaction and relies on environmental triggers, automation, and natural inputs like voice, motion, or biometrics to help users achieve their goals without interacting with a visible interface. Traditional UI design, by contrast, builds on visuals, menus, icons, and screens that users navigate.

No-UI excels in scenarios where screen-based interactions feel unnecessary or distracting, such as smart homes, voice assistants, or wearables. It minimizes friction, shortens task flows, and aims to feel effortless. Instead of focusing on the “how,” no-UI focuses on the “what”: delivering outcomes, not steps.

Get a deeper dive into UI design to take away some key insights about how different interface types call for different design approaches.

No-UI and invisible design are closely related but not identical. Both aim to reduce visible interface elements and minimize user effort. No-UI refers specifically to removing screens and visual controls, designing experiences that occur through sensors, automation, or natural input like voice. Invisible design is broader: it can involve minimal UIs, subtle cues, and interactions that users barely notice but still interact with, things like haptic feedback or contextual popups.

Understand more about ubiquitous computing for important insights into technology that surrounds yet “melts” into the background to help users.

Effective no-UI design reduces cognitive load, removes friction, and speeds up user interactions by eliminating the need to navigate screens or menus. It works especially well in hands-busy or eyes-busy situations, such as driving, cooking, or exercising.

By using sensors, context, and automation, no-UI systems act without requiring deliberate input, freeing users to focus on the task, not the technology. Plus, it supports accessibility by relying on natural behaviors like speaking, moving, or location-based triggers.

No-UI encourages habit formation and deeper product integration into users’ lives, too. When done well, it creates intuitive, seamless experiences that feel more like a helpful assistant than a tool users must operate.

Explore the realm of accessibility for insights into how to design better experiences for users with disabilities as well as all other users.

No-UI design follows four core principles: anticipation, context-awareness, automation, and feedback. The system should predict user needs based on behavior, location, or time. It must act appropriately without explicit commands, but offer enough control and clarity to build trust.

Context-awareness ensures the system reacts intelligently to real-world variables, like light levels or proximity. Automation simplifies tasks by removing steps, but it must be reversible or adjustable. Feedback, though not visual, is essential: through sound, haptics, or physical change, the system must let users know something happened. No-UI isn’t about removing design; it’s about designing for the right kind of invisible interaction that feels natural, respectful, and effortless.

Harvest insights about haptic design and haptic interfaces to help you design feedback that users can feel and find helpful.

Begin by identifying where traditional UIs create friction, such as repetitive tasks or interruptions. Then, ask: What user goals could be achieved without screens?

Use context (time, location), sensors (motion, sound), or behaviors (habits, routines) to inform actions. Sketch flows where technology responds to user intent passively or with minimal input.

Prototype using simple triggers, like voice or presence detection, and test how users react. Prioritize clarity: even invisible interactions need feedback. Ensure accessibility, too, and that error recovery is possible.

Check out some fascinating and helpful points to help with your designs, in our article How to manage the users’ expectations when designing smart products.

Always design fallback UI options. Not all users will trust or understand invisible systems, and no-UI interactions may fail due to sensor errors, ambient noise, or unusual user behavior.

Fallbacks offer clarity and control, and are essential “safety ledges” especially for onboarding, troubleshooting, or accessibility. They can be minimal, like a companion app, a voice prompt, or a manual switch, but you’ll need to include them in your design solution. Remember, users should feel empowered, not trapped.

Fallbacks let users verify system actions and adjust preferences, too. A good no-UI experience feels effortless but never confusing. So, when you offer a visible alternative, you’ll give users confidence and ensure the system remains usable across diverse contexts and scenarios.
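A fallback chain like “voice, then app, then physical switch” can be sketched as a function that tries each input channel in order and tolerates failures along the way. The names below (`Channel`, `resolve_command`, the command strings) are hypothetical, and each channel is modeled as a plain callable that returns a command or `None`.

```python
from typing import Callable, Optional, Sequence, Tuple

# A channel is a (name, reader) pair; the reader returns a command or None.
Channel = Tuple[str, Callable[[], Optional[str]]]


def resolve_command(
    channels: Sequence[Channel],
) -> Tuple[Optional[str], Optional[str]]:
    """Return (channel_name, command) from the first channel that yields one.

    Channels are tried in the order given, so list the most natural input
    first and the most dependable one (e.g. a physical switch) last.
    """
    for name, read in channels:
        try:
            command = read()
        except Exception:
            # Treat a failing sensor like a silent one and move on.
            continue
        if command:
            return name, command
    return None, None
```

Returning the channel name along with the command is a small design choice that pays off in testing. It lets you see how often users end up on the fallback, which is a useful signal that the primary interaction is failing them.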

Pick up a treasure trove of helpful insights to help with your design endeavors, in our article How Can Designers Adapt to New Technologies? The Future of Technology in Design.

Products that perform routine, context-aware, or hands-free tasks work best with no-UI design. They include smart home devices (lights, thermostats), wearable fitness trackers, voice assistants, and automotive systems.

No-UI works well in environments where users benefit from reduced screen time, too, such as in kitchens, gyms, or healthcare. The key is predictability: if a product’s primary function is simple, repeated, or tied to external signals like time, motion, or location, no-UI can streamline it significantly. For example, smart locks that open when an authorized user approaches remove unnecessary steps.

Speaking of healthcare, explore valuable insights to help design for this industry, with our article Healthcare UX—Design that Saves Lives.

You can apply no-UI design in digital apps, too. Many apps already use no-UI principles through background automation, predictive behavior, and minimal interruptions. For example, a fitness app that automatically tracks workouts using motion data or a calendar app that offers smart suggestions is practicing no-UI design.

It’s not about the absence of screens; it’s about minimizing direct interaction when it isn’t needed. Physical products often use hardware triggers (sensors, buttons), but apps can respond to passive input like user routines, location, or activity data. The goal is the same: anticipate needs, simplify flows, and make the interaction feel like it “just happens.”

Get a greater grasp of the “core ingredients” users need and want from products, with our article The 7 Factors that Influence User Experience.

AI (artificial intelligence) and voice systems bring no-UI design to life by interpreting natural language, behavior, and context. Voice assistants like Alexa or Siri let users complete tasks, like setting reminders or controlling smart devices, without visual interaction. AI analyzes patterns and adapts to user habits, enabling proactive recommendations or actions. For instance, Google Assistant may suggest leaving early for a meeting based on traffic, without prompting. These systems must offer strong feedback through voice or sound to maintain trust. It’s also important to address ambiguity in language and ensure privacy. When done well, AI and voice create fluid, human-like interactions that reduce cognitive load and improve accessibility.

Venture into the world of Voice User Interfaces (VUIs) for a wealth of helpful insights into designing exceptional voice-controlled experiences.

Start by anchoring interactions in real-world logic. Use metaphors which users already understand, like speaking, walking, or gestures, to design intuitive triggers. Provide clear, immediate feedback through audio, haptics, or environmental changes (like lights dimming).

Keep interactions simple and predictable to prevent confusion. Let users interrupt, confirm, or undo actions easily. And ensure context matches the system’s response; users should feel the system “gets” their intent. Avoid over-automation; too much can feel intrusive and erode trust.

Test in realistic conditions and include users from diverse backgrounds to spot edge cases. Your goal is to create interactions that feel like second nature, ones that are subtle, responsive, and always respectful of the user’s control.

Find essential insights about the most powerful force between brand and user, in our article Trust: Building the Bridge to Our Users.

No-UI design can frustrate users if the system misinterprets intent, offers unclear feedback, or lacks control. Here are some main areas of potential concern:

- Invisible interactions may confuse new users or those with accessibility needs.

- Users might not realize an action occurred, or worse, that the system acted incorrectly.

- Over-automation can feel intrusive or even creepy if users don’t understand why something happened.

- There are technical risks: sensors fail, contexts change, and AI makes mistakes.

- Privacy concerns rise when data collection becomes invisible: many users worry about what’s being recorded or monitored, and about where that data is sent, stored, and examined.

So, it’s vital to provide transparency and fallback options, test extensively across edge cases, design ethically, and act in the user’s best interests. No-UI should empower users, not remove their agency or create uncertainty, and it should never compromise their privacy through covert monitoring.

Dig deeper for essential design insights to understand AI and the world of designing with it, with our article AI Challenges and How You Can Overcome Them: How to Design for Trust.

Hui, T. K. L., & Sherratt, R. S. (2017). Towards disappearing user interfaces for ubiquitous computing: Human enhancement from sixth sense to super senses. Journal of Ambient Intelligence and Humanized Computing, 8, 449–465.

This open-access article explicitly addresses “disappearing user interfaces” (DUIs): interfaces that move away from traditional screen-based interaction toward ambient, sensor-driven, content-centric design. The authors propose a taxonomy of DUIs that leverage multisensory engagement, data fusion, and contextual awareness to support intuitive interaction for non-technical users. Use cases include wearables and IoT systems, framed within the concept of human enhancement toward a “sixth sense” or “super senses.” This paper provides a foundational scholarly account of “no-UI” design within ubiquitous computing and is particularly useful for understanding how ambient and invisible interfaces support seamless user experiences.

Deshmukh, A. M., & Chalmeta, R. (2024). User experience and usability of voice user interfaces: A systematic literature review. Information, 15(9), 579.

This systematic literature review classifies and synthesizes academic research on Voice User Interfaces (VUIs), focusing on user experience and usability. The authors reviewed 61 publications and organized them into six thematic research categories, ranging from VUI interaction principles to evaluation methods. They highlight the fragmented state of VUI research and call for more consistent evaluation frameworks. Notably, while VUIs offer hands-free, natural interaction and growing applicability, they still face usability limitations, such as recognition errors, limited feedback, and poor learnability, that restrict their potential as full GUI alternatives. The study provides a clear overview of current challenges and directions for future work.
