Artificial Intelligence (AI) is exceptionally effective at processing large volumes of data. Even so, it still needs human guidance when it is applied in the real world, not least because its impacts are ones that humans will notice. Watch as AI Product Designer Ioana Teleanu introduces the two main types of AI research tools, insight generators and collaborators, and explains how you can apply them in UX research.


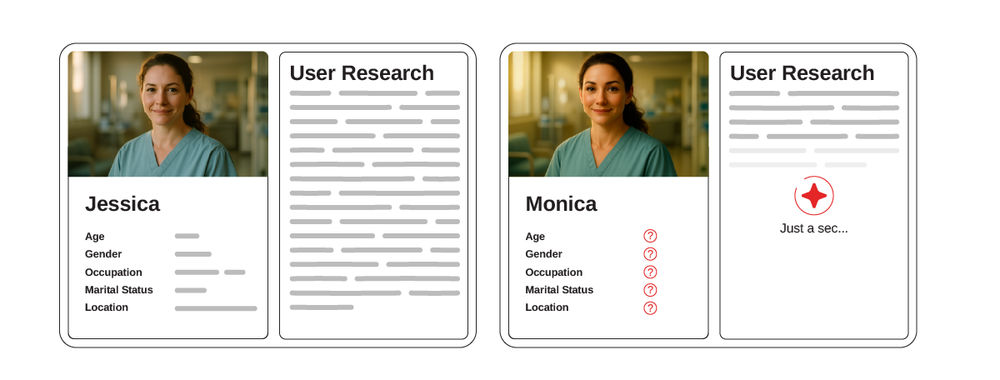

As Ioana explained, the primary goal of insight generators is to provide concise, informative summaries of user research sessions. They are a form of narrow AI that analyzes the transcripts of a research session. Crucially, however, they do not take any additional information into account, such as context, past research, or background details about the product or its users. As a result, insight generators cannot interpret the complete picture of user interactions and experiences.

Collaborators are more advanced: they can be trained with human-generated interpretations and context, including research goals, research questions, and product background. Despite these capabilities, they are still an example of narrow AI, not general AI. Collaborators can recommend thematic analysis tags, generate insights based on transcripts and contextual data, and analyze researchers' notes to create more nuanced themes and insights. However, they struggle with visual data, have issues with citation and validation, and carry the potential for bias to creep into research results.

Bias in AI can come from training data (systematic bias), data collection (statistical bias), algorithms (computational bias), or human interactions (human bias). Bias distorts results and can cause real problems, not to mention the ethical implications of biased decision-making in design. To reduce bias, it is vital to use diverse and representative data, test and audit AI systems, and provide clear guidelines for ethical use. These steps help you aim for fair, unbiased AI decisions that benefit everyone.

The Takeaway

AI research tools are powerful assistants. They can reduce cognitive load, support decision-making by processing large volumes of data, automate tasks such as image formatting and text resizing, offer deeper insights into human behavior and usage patterns, create prototypes and a wide variety of visual assets, and pinpoint usability issues.

There are two types of AI research tools: insight generators and collaborators. Insight generators summarize user research sessions by analyzing transcripts, but they cannot consider additional context, which limits their understanding of user interactions and experiences. Collaborators provide more context-aware insights through researcher input, but they still struggle with visual data, citation, validation, and potential biases.

To overcome these tools' limitations, exercise caution, maintain human oversight, evaluate outputs critically, stay mindful of potential biases, and use AI as a supplementary decision-making source rather than your only one.

References

Hero image: © Interaction Design Foundation, CC BY-SA 4.0