If you are new to designing voice user interfaces, you may quickly find yourself unsure of how to create great user experiences. The way users interact with voice user interfaces is very different from how they interact with graphical ones. Not surprisingly, users expect the interaction to feel more like communicating than like using technology, because they associate voice with talking to other people, not with technology. Voice user interfaces are growing in number, sophistication and affordability. Here, you will learn what users expect from voice communication and get practical guidelines for how to design great voice user interfaces.

To be able to create great user experiences with voice interactions, you need an understanding of how people naturally communicate with their voices, and you need to understand the fundamentals of voice interaction. Let’s start by examining some of the attributes of voice communication between people. From there, we’ll give you some practical guidelines based on colossal internet retailer Amazon’s best practices for how to create voice interaction between user and technology. With its Alexa voice assistant, Amazon was one of the first companies to achieve both commercial success and user praise for a voice-interactive product.

Voice User Interfaces

They’re everywhere. We can find voice user interfaces in phones, televisions, smart homes and a range of other products. With the advances in voice recognition and smart home technology, voice interaction is only expected to grow. Sometimes, voice user interfaces are optional features of otherwise graphical user interfaces, e.g., when you use your voice to search for movie titles on your TV. Other times, voice user interfaces are the primary or only way to interact with a product, such as the smart home speakers Amazon Echo Dot or Google Home.

Author/Copyright holder: Apple Incorporated. Copyright terms and licence: Fair Use.

Apple’s Siri interface is an example of a voice user interface that coexists with a graphical user interface, here an iPhone.

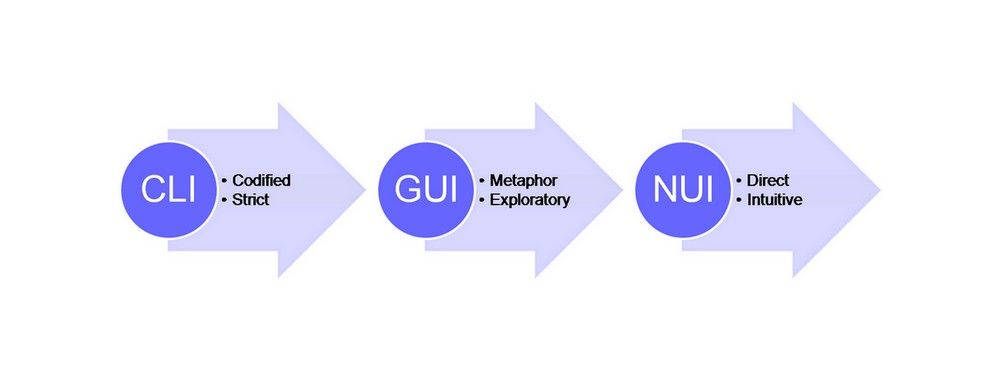

If you add voice user interfaces to your UX design, you can use voice interaction to make existing graphical interfaces more convenient for the user. You can also design your own applications for products that only use voice interaction, which is very handy if you want to work with smart environments. However, voice user interfaces are very different from graphical user interfaces, and you cannot apply the same design guidelines. In voice user interfaces, you cannot create visual affordances. Consequently, users looking at one will have no clear indication of what the interface can do or what their options are. At the same time, users are unsure of what they can expect from voice interaction, because we normally associate voice with communication with other people rather than with technology.

“The great myth of our times is that technology is communication.”

—Libby Larsen, American composer

Author/Copyright holder: Guillermo Fernandes. Copyright terms and licence: Public Domain.

The Amazon Echo Dot speaker uses the voice user interface Alexa as the primary form of interaction.

What do users expect from voice communication?

“Speech is the fundamental means of human communication. Even when other forms of communication - such as writing, facial expressions, or sign language - would be equally expressive, (hearing people) in all cultures persuade, inform and build relationships primarily through speech.”

—Clifford Nass and Scott Brave, Stanford researchers and authors

In their book on voice interaction, Wired for Speech, Stanford researchers Clifford Nass and Scott Brave argue that users to some extent relate to voice interfaces in the same way that they relate to other people. Because speech is so fundamental to human communication, we cannot completely disregard our expectations for how speech communication normally takes place, even if we are fully aware that we are speaking to a device rather than a person. That means that, to understand the user’s underlying expectations of voice interfaces, we must understand the principles that govern human communication. In other words, we need to take a look in the mirror before we can determine what makes a design of this type either click with users or end up frustrating them. Remember, too, that users become frustrated very quickly if things go wrong.

In her classic book, Plans and Situated Actions: The Problem of Human-Machine Communication, Lucy Suchman describes human communication as situated and context-bound. When people have a conversation, a lot of information is not contained in the spoken message itself. We use our knowledge of the context to create a shared meaning as we listen and talk. Suchman uses the following example (from a 1977 book by Marilyn Merritt) of a brief conversation where context knowledge is necessary to understand the meaning of what is being said:

A: “Have you got coffee to go?”

B: “Milk and Sugar?”

It may not be immediately apparent, but if you think about the above dialogue, you will see that you have already made a lot of inferences about the context in which such a conversation might take place. For example, you might have already guessed the location (a coffeehouse or at least some sort of food outlet) and the roles of the people involved (customer and worker). If this conversation made sense to you, then you must understand what a coffeehouse is, what it means to buy something and that a lot of people like milk and sugar in their coffee. On top of that, you also need to understand that when speaker A asks whether a coffeehouse has got coffee to go, she wants to buy coffee, even though she does not specifically state that wish. ‘To go’ is an idiom: a construction whose meaning differs from the literal meaning of its words. Here, it conveys that a customer wants to take a purchase (almost always food or drink) out of the establishment rather than sit in and enjoy it there. Just as ‘throwing’ a party does not involve picking up the guests and hurling them, we don’t mean ‘to go’ in the literal sense; a coffee cup will not sprout a pair of legs and walk out of the shop with you.

Also, when speaker B answers with the question about milk and sugar, you must understand the implied ‘yes’. In text, the conversation looks strange, but most people standing in line at a coffeehouse who overheard the conversation would be able to understand it without any problems. We do not need to have the message spelt out, as we already understand the context.

People expect to be understood when they express themselves as in the previous example. This is where we as designers have to watch ourselves (another idiom, incidentally, for being careful). The danger is this: for voice recognition technology, grasping all the necessary contextual factors and assumptions in this brief exchange is next to impossible. If you were to design a voice-operated coffee machine, you would probably have to break with the users’ expectations and make them state more clearly that they would like to purchase a cup of coffee. Until the state of the art advances to the point where systems can accommodate idiomatic expressions, we need to help our users appreciate the need to keep their phrasing direct and basic. That way, the voice recognition system won’t be thrown by ambiguity or by input it cannot decipher. Disciplining ourselves in this way has a further benefit: it keeps us mindful that English is a quirky language, which often has several words for the same item.

On that note, let’s see some pointers for how you should guide the user to talk to a voice user interface and how you handle the differences between voice user interfaces and graphical user interfaces.

Author/Copyright holder: Jason Devaun. Copyright terms and licence: CC BY-ND 2.0

When people communicate, we use our knowledge of the context to create a shared understanding.

Guidelines for Designing Voice User Interfaces

“Words are the source of misunderstandings.”

―Antoine de Saint-Exupéry, aviator and author (the above is from The Little Prince)

There is no way to get around the fact that users often have unrealistic expectations for how they can communicate with a voice user interface. Part of the reason is that, at least by the late 2010s, advances in voice technology were being introduced at an unprecedented pace. People are still on their ‘honeymoon’ with this relatively fresh phenomenon. That’s why we need to be especially mindful of how we present our designs (which is why the Echo Dot looks like a simple ‘dot’ rather than a bust-like sculpture with a moving jaw and eyes that light up).

If you look at the online reviews for Amazon’s Echo Dot speaker, it is clear that some people form a close bond with their speaker, one that resembles a bond with a pet more than a relationship with a product.

“Artificial intelligence? Perhaps. But people rarely make me smile or laugh. Alexa rarely fails to do so. And the enjoyment I get from having her in my home is anything but ‘artificial.’”

—Carla Martin-Wood, a very happy Amazon Alexa customer on Amazon.com

Since you cannot fully live up to the user’s expectations of a natural conversation partner, it becomes even more important to design the voice user interface so that it contains the right amount of information and handles the user’s expectations elegantly. You can use these guidelines inspired by Amazon’s best practices for how to create voice interaction skills for Alexa as a starting point:

Provide users with information about what they can do.

On a graphical user interface, you can clearly show users what options they can choose from. An iPad screen, for instance, is a wonderfully neat set of portals, or doorways that you can enter, and from there enter more specific ones until you get to where you want to be. A voice interface has no way of showing the user what options are possible, and new users base their expectations on their experience with conversations. Therefore, they might start out asking for something that doesn’t make sense to the system or which isn’t possible. In that case, provide the user with the options for interaction. For example, you could have a weather app say: “You can ask for today’s weather or a weekly forecast.” Similarly, you should always provide users with an easy exit from a functionality—by listing ‘exit’ as one of their options.
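As a rough illustration of this guideline, the sketch below shows one way a voice application might fall back to listing its options when it does not recognise a request. Everything here is hypothetical: the `respond` function, the request names and the forecast texts are invented for the example and do not come from Amazon’s Alexa toolkit.

```python
# Hypothetical request names and invented forecast texts for an imaginary weather skill.
SUPPORTED_REQUESTS = {
    "today": "Today's weather forecast is mostly sunny and dry.",
    "week": "This week's weather forecast is sunny until Thursday, then rain.",
}

# Spoken whenever the request is not understood: lists the options and the exit.
HELP_PROMPT = (
    "You can ask for today's weather or a weekly forecast. "
    "Say 'exit' to leave."
)


def respond(request: str) -> str:
    """Return the spoken reply for a recognised request, or list the options."""
    key = request.strip().lower()
    if key == "exit":
        return "Goodbye."
    # Unknown or impossible requests fall back to the list of options.
    return SUPPORTED_REQUESTS.get(key, HELP_PROMPT)


print(respond("play chess"))
```

Because the fallback always names the available options and the exit, a new user who starts with an unsupported request still learns what to say next.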

Where am I?

In graphical user interfaces, users can see when they enter a new section or interface. In voice user interfaces, users must be told what functionality they are using. Users can quickly get confused about where they are, or they might activate a functionality by mistake. That’s understandable: they’re ‘running blind’ in this regard, with only a nearly featureless device (in most cases) to look at. Travelling in the ‘dark’, they’ll tend to get flustered far more easily than they would with a visually oriented device (as a species, after all, we’re most attuned to relying on our eyes). Thus, when the user asks for today’s weather, for example, it is a good idea to say: “Today’s weather forecast is mostly sunny and dry” rather than just “sunny and dry.” This lets the users know which functionality they are using. For instance, if users want to know whether their outdoor plants will need watering while they go on a week’s holiday, they will almost certainly want to hear a forecast for the next seven days.

Author/Copyright holder: Ramdlon. Copyright terms and licence: CC0 Public Domain

In interaction with voice user interfaces, the user has no visual guidance, and getting lost will happen all too easily. It is important to inform the user what functionality she is using and how to exit it.
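The “Where am I?” guideline can be sketched in a few lines: rather than speaking the bare answer, the response is framed with the name of the functionality that produced it. The helper name `frame_answer` is an assumption made for this example, not part of any real voice toolkit.

```python
def frame_answer(functionality: str, answer: str) -> str:
    """Prefix the bare answer with the name of the functionality in use."""
    return f"{functionality} is {answer}."


# The bare answer "mostly sunny and dry" becomes a full, self-locating sentence.
print(frame_answer("Today's weather forecast", "mostly sunny and dry"))
```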

Express intentions in examples.

When people talk, they often don’t express their full intentions. We’re used to taking shortcuts, using slang, and hinting at what we really want a lot of the time. That may work well around other people, who will tend to ‘get’ what we’re ‘getting at’. However, in voice user interfaces, expressing intentions is necessary for the system to understand what the user wants. Moreover, the more information about her intentions a user includes in a sentence, the better. Amazon uses the app Astrology Daily as an example. A user can say, “Alexa, ask Astrology Daily for the horoscope for Leo,” and get the information she wants right away, instead of first saying, “Alexa, ask Astrology Daily for the horoscope,” and then asking for the horoscope she wants. Users might not realise this on their own, but you can demonstrate it to them if you use full intentions in all your examples of an interaction, e.g., in your written user guide or when the user asks the system for help or more information in relation to an interaction.
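To make the difference between a full and a partial intention concrete, here is a minimal sketch of how a handler for the horoscope example might behave. It assumes the speech recogniser has already extracted the star sign (or failed to); the function name, the return format and the reply texts are all hypothetical and are not taken from the Alexa Skills Kit.

```python
from typing import Optional, Tuple

ZODIAC_SIGNS = {
    "aries", "taurus", "gemini", "cancer", "leo", "virgo",
    "libra", "scorpio", "sagittarius", "capricorn", "aquarius", "pisces",
}


def handle_horoscope_request(sign: Optional[str] = None) -> Tuple[str, bool]:
    """Return (spoken reply, finished?) for a horoscope request.

    A full intention (sign included) is answered in one turn; a partial
    one triggers a follow-up question that demonstrates the full phrasing.
    """
    if sign is not None and sign.lower() in ZODIAC_SIGNS:
        return f"Here is the horoscope for {sign.title()}.", True
    return "Which sign would you like the horoscope for? For example, say 'Leo'.", False


print(handle_horoscope_request("Leo"))  # answered in a single turn
print(handle_horoscope_request())       # needs a second turn
```

Note that the follow-up question itself contains an example answer, so the prompt teaches the user what a complete request sounds like.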

Limit the amount of information.

When users browse visual content or lists, they can go back to information they overlooked or forgot. That is not the case with verbal content. With verbal content, you need to keep all sentences and information brief so that the user does not become confused or forget items on a list. Amazon recommends that you do not list more than three different options for an interaction. If you have a longer list, you should group the options and start by providing the users with the most popular ones. Then ask whether they would like to hear more options. User forgetfulness is only half the issue here, though. The ‘flip side’ is the frustration that will boil up within users if they get taken on a long, meandering ‘scenic route’ when all they want to do is achieve a simple goal. Have you ever become annoyed by the long menus offered when you call a company and get a recorded voice rattling off a list of options and telling you to press ‘1’ for this, ‘2’ for that, etc.?
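The three-option limit can be sketched as a small prompt builder. This is only an illustration under stated assumptions: the options are assumed to be sorted by popularity already, and the function name, constant and wording are invented for the example.

```python
from typing import List

MAX_OPTIONS = 3  # the limit the guideline attributes to Amazon's recommendation


def build_prompt(options: List[str], start: int = 0) -> str:
    """Speak at most MAX_OPTIONS options, beginning at index `start`.

    `options` is assumed to be ordered from most to least popular.
    """
    chunk = options[start:start + MAX_OPTIONS]
    if not chunk:
        return "There are no more options."
    if len(chunk) == 1:
        spoken = chunk[0]
    else:
        spoken = ", ".join(chunk[:-1]) + " or " + chunk[-1]
    prompt = f"You can choose {spoken}."
    # Only offer more if there really is something left to list.
    if start + MAX_OPTIONS < len(options):
        prompt += " Would you like to hear more options?"
    return prompt


menu = ["news", "weather", "music", "sports", "traffic"]
print(build_prompt(menu))     # first three options, plus the offer of more
print(build_prompt(menu, 3))  # the remaining two options
```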

Use visual feedback.

If possible, use some form of simple visual feedback to let the user know that the system is listening. Users get frustrated if they are unsure of whether the voice user interface has registered that they are trying to interact with it. Think of a phone conversation when you’ve been speaking and hear only a prolonged silence, prompting you to ask the other party if he or she is still there. If you only use voice feedback to tell the user whether the system knows the user is trying to interact with it, the user must wait until she is done talking before she knows if the system has heard or not. There’s a way to short-circuit the risk of their having to go, “Did you get that?” or “Hey, are you listening?” If you use visual feedback to let users know that the voice user interface is listening, the user can see right away that what she is saying is being registered (similarly to when we talk to other people and can see by their nonverbal communication that they are listening). Amazon’s Echo Dot handles this masterfully in that, on hearing you say ‘Alexa’, the bluish light swirls around the top rim of the device, signalling that Alexa’s ‘all ears’ (another idiom, we should add; we don’t recommend designing a device that features multiple ears protruding from its surface).

The Take Away

To design great voice user interfaces, you must find an elegant way to provide users with missing information about what they can do and how they can do it, without overwhelming them. You must also handle the expectations users have from their experience with everyday conversations. Human communication is context-bound, but in voice interaction, users must be guided in how to express what they want in a way that the system can understand. You can help them by providing information about what they can do and what functionality they are using, by showing them how to express their intentions in a way that the system understands, by keeping sentences brief and by providing visual feedback so they know whether the system is listening. Voice interaction may pose more of a challenge in some respects than a graphically based system; nevertheless, it’s fair to say that this mode will become more prevalent as more aspects of everyday life feature voice-controlled interaction. So, the time is right to make sure you can do it well.

References & Where to Learn More

Course: UI Design Patterns for Successful Software

Lucy A. Suchman, Plans and Situated Actions: The problem of human-machine communication, 1985

Clifford Nass & Scott Brave, Wired for Speech: How Voice Activates and Advances the Human Computer Relationship, 2007

See all of Amazon’s guidelines for Voice Interaction here.

Hero Image: Author/Copyright holder: Marc Wathieu. Copyright terms and licence: CC BY-NC-ND 2.0